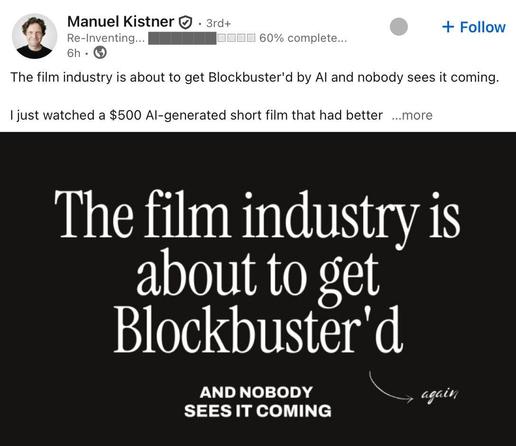

I think it will start to be integrated in real movies, no matter the quality differences.

The thing that he is wrong about is the death of Hollywood: they are going to be the ones doing it.

It can be done, and somebody will do it. However, rendering individual scenes or a five-minute music video is one thing; creating a film that is 90-120 minutes long and doesn't bore the audience to death is quite another. While a text generator can create a rough first draft of a screenplay, it still needs a lot of manual work to turn it into something halfway decent. The main problem is that current AI models don't understand anything; they just learn to spot recurring patterns in the training data and synthesise new data with those patterns. And then there is the problem of sheer raw computing power: AI only seems cheap because there are investors pouring billions of dollars into computing centres, but once you pay for it all by yourself, you will find that it is either quite expensive or very slow. You can run AI models on your own hardware, but a €2000 graphics workstation or gaming rig with the latest Nvidia GPU is barely enough to run a very small LLM, and while it can generate a still image with Stable Diffusion or Flux within seconds, it still needs to run for many hours to produce a few seconds of video. If you have a more humble PC or laptop, it will take hours to render a still image, and you can totally forget about video or text.

Since our own brains need significantly less power than a high-end PC, it looks like there should be more efficient hardware architectures for running artificial neural networks. With our current digital neuron models, we won't ever reach any level of intelligence that's even close to the human brain, and by trying to, we are wasting resources.

So #AI isn't going to end Hollywood, but it gives us new tools. Video and audio generators aren't going to replace actors and movie sets and film crews, but they give us new options for SFX and postprocessing. Voice actors dubbing movies in different languages might be a thing of the past soon, since we can now let the actors speak any language in the world in their own voice (with resynth'd video for natural-looking lip movement). Prop makers will have less to do, since generative 3D models can make a lot of variations of a single 3D object, with 3D printing to turn them into actual objects that only need some assembly and some paint, which means that a much smaller number of prop makers can make more props faster. AI tools are becoming part of all kinds of software. In fact, machine learning and artificial neural networks have been part of graphics software like Photoshop for quite a while; how else do you think content-aware fill works?

The idea that we can just replace all the humans with AI in the very near future is bullshit, of course; it isn't going to happen with the kind of hardware and software we have. We can, however, have fewer people do more work faster, just like we can with any other kind of power tool. Just like a lumberjack with a chainsaw can do the work of a whole crew of lumberjacks with simple saws, a 3D modelling artist working on a computer game can now make an object, have the AI generate dozens of variations, and then select the best of those for some final manual adjustments if needed.

It's also impossible to train computer vision/image recognition models to tell AI-generated images apart from human-made ones. It simply isn't possible, unless there are some obvious artifacts which any human can spot as well. Anybody who tells you anything else is just trying to sell AI detector snake oil.

@canleaf @NicholasLaney @Daojoan People often think I'm quoting from some book or otherwise repeating something from memory when they hear me talk about any special interest of mine. I'm basically interested in anything computer-related, except for multiplayer games (I like to play my games alone and offline; for me the entire point of computer games is that I don't need any others to play them). Apart from computer science and engineering, I'm also interested in physics and chemistry and biology, in all kinds of complex systems both natural and man-made, in science fiction and fantasy stories across all kinds of media (novels, role playing games, comics and manga, anime and Western animation, TV drama and cinema), building and repairing simple analogue electronic circuits, making weird (and often erotic) art out of found objects...

For as long as I can remember, I have always been fascinated by artificial neural networks, even when they were still very primitive and couldn't do very much. I have also always liked machine-generated art, and nobody will ever be able to convince me it isn't "art" just because no human made it. I think everything can be art, depending on how you look at it, even if it's just a piece of rubbish, some mass-produced plastic object that used to be part of some broken device or maybe just a packaging part from my 3D printer. Just turn it so that it shows its most interesting angle and mount it on the wall.

"Neural networks" (unless we're talking chemical-based ones) are still very primitive and cannot do very much.

One thing that comes to mind is ABBA, who had their "ABBAtars" created with the help of a myriad of cameras that recorded their movements, which were then "attached" (for lack of a better word, sorry) to their artificial counterparts.

Quite interesting how that was brought to life.

Another thing is that I saw a video a week or so ago about an AI model that generated near-perfect lip-sync video from a static portrait picture.

It still has its flaws, but it was impressive nonetheless.

So, it's already possible and will become more widespread in the time to come.

@NicholasLaney @Daojoan

Well, I suppose eventually they will be able to phase out any kind of "hallucination / shapeshifting".

@Daojoan @NicholasLaney