"I used AI to..." is nothing more than "Listen, I'm not an asshole, but..." for the 21st century.

Reality is at fault.

@glynmoody

I was one of the first to study #AI in college back in the mid-80s.

What people refer to as "AI" today is nothing more than "Deep Database Scrubbing" (which is why it requires so much power.)

It constantly scours billions of data sources and "makes assumptions" based on the number of links/connections between two bits of info. Garbage_In/Garbage_Out.

It doesn't test for accuracy, and the more unreliable the sources, the more unreliable the conclusions.

Collapse is inevitable.

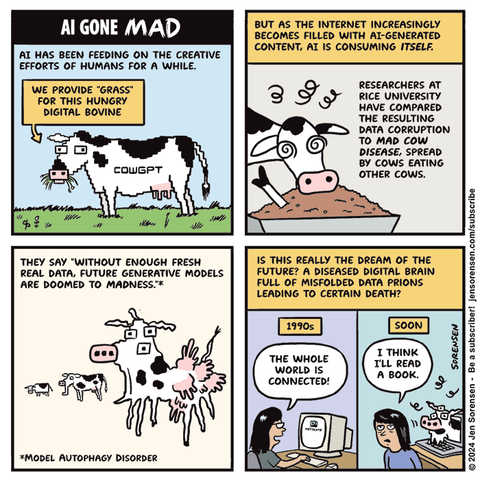

@glynmoody Sounds like what @jensorensen explained beautifully in this cartoon…

@glynmoody @stuartl @jensorensen

My analogy was inbreeding, but mad cow is similar.

https://mstdn.social/@stekopf/114581174083947454

Sepia Fan (@stekopf@mstdn.social)

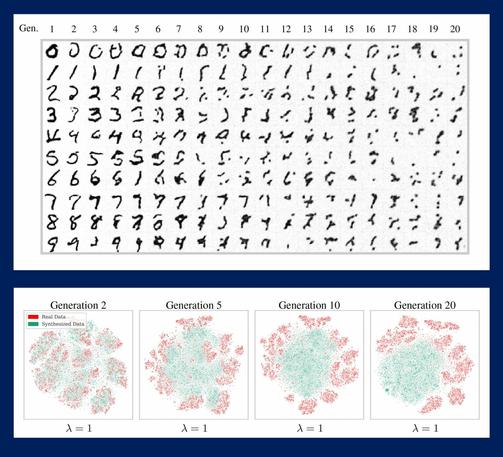

Attached: 2 images @jensorensen@mastodon.social Thank you. I ended up at the University page: "Self-Consuming Generative Models Go MAD" https://news.rice.edu/news/2024/breaking-mad-generative-ai-could-break-internet The subtitle "Training #AI systems on synthetic data could include negative consequences" is quite the understatement if one looks at the left image. 🤪 Left image shows #LLM deterioration when trained with artificial data. Right image shows the same when trained with prepared artificial data (see link for details). Even there 20% of the info gets distorted quickly.

Not exactly the sharpest tool in the shed, is he?

The reason AI search isn't working properly anymore is in the /robots.txt files. Except on The Register. 😄

@daz @NicholasLaney @glynmoody

The scrapers haven't been following robots.txt for a while.

https://www.technologyreview.com/2025/02/11/1111518/ai-crawler-wars-closed-web/
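For anyone wondering what "following robots.txt" means in practice, here is a minimal sketch using Python's standard `urllib.robotparser`. The rules, the `example.com` URL, and the `is_allowed` helper are made up for illustration; `GPTBot` is used only as an example crawler name. The point is that the file is purely advisory: a crawler has to run a check like this voluntarily, and nothing stops one that skips it.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules only: block one named AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/article"  # hypothetical URL
    for agent in ("GPTBot", "SomeBrowser"):
        print(agent, is_allowed(ROBOTS_TXT, agent, page))
```

A well-behaved crawler calls something like `is_allowed` before each request. A scraper that ignores the file simply never asks.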

I think the only way people can speed up the collapse is by finding as many ways to poison the content they create as possible. AI devs will never voluntarily limit AI. It needs to be made a nightmare for them to create training sets.

@KimSJ @glynmoody Yep. It should be obvious to anyone who isn't susceptible to hype, that flooding the internet with slop will only poison the training data for future iterations of genAI, degrading the quality of their output over time. The models will increasingly end up feeding on *each other's* error-strewn output.

These shitty LLMs we have now? They're the pinnacle of how good such models will ever get, no matter how many more billions are "invested" in their development.

@ApostateEnglishman @KimSJ @glynmoody

we used to call it GIGO

@samiamsam @KimSJ @glynmoody Yep. Only in this case, on an industrial scale - and then we feed all that garbage *back in* to the machines and get even worse garbage back out.

We keep doing this until everything is garbage.
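The feedback loop described above can be sketched with a toy model. This is not an LLM and not the method from the linked research, just an assumed stand-in: the "model" is a Gaussian fitted to a handful of samples, and each generation is trained only on the previous generation's output. The function name and parameters are invented for the sketch.

```python
import random
import statistics


def simulate_collapse(generations: int, sample_size: int, seed: int = 0) -> list[float]:
    """Repeatedly refit a Gaussian to its own samples; return the spread per generation."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0          # generation 0: the "real" data distribution
    spreads = [sigma]
    for _ in range(generations):
        # "Generate content" from the current model...
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # ...then "train" the next model on nothing but that content.
        mu = statistics.mean(samples)
        sigma = statistics.stdev(samples)
        spreads.append(sigma)
    return spreads


if __name__ == "__main__":
    spreads = simulate_collapse(generations=500, sample_size=5)
    print(f"initial spread: {spreads[0]:.3g}, final spread: {spreads[-1]:.3g}")
```

With small samples the estimated spread tends to drift toward zero over many generations, so the "model" forgets the variety in the original data. Real systems are far more complicated, but the direction of the effect is the same one the thread is describing.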

I proposed at an early point in this farce that regulators should mandate that any content produced by AI must be tagged as such. This would have allowed future AI iterations to disregard it. Sadly, I think that ship has well and truly sailed now.
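As a rough sketch of how such tagging could be used, assume every document carried a trustworthy `ai_generated` flag. Both the flag and the `Document` type are hypothetical; no such mandatory label exists today. Building a training set would then be a simple filter:

```python
from dataclasses import dataclass


@dataclass
class Document:
    text: str
    ai_generated: bool  # hypothetical mandatory label, not a real standard


def human_only(corpus: list[Document]) -> list[Document]:
    """Keep only documents that are not tagged as AI output."""
    return [doc for doc in corpus if not doc.ai_generated]


if __name__ == "__main__":
    corpus = [
        Document("Field notes written by a person.", ai_generated=False),
        Document("Auto-generated summary.", ai_generated=True),
    ]
    print([doc.text for doc in human_only(corpus)])
```

The filter is trivial. The hard part is the assumption behind it, namely that the tag is present and honest.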

@chris_e_simpson

They may well have to do that anyway, to stop it marking its own homework; IIUC people are beginning to see its search functionality starting to degrade.

Makes me think of this:

@glynmoody They have just about reached the limits of how much training they can use with existing data collection methods and artificial refinement methods.

The irony is, one way they could exponentially improve their model training is the one thing that still won't occur to them: pay people to actually write stuff for it with consistent quality and formatting instead of just stealing data from all over the Internet. They could also get higher quality out of smaller models. And then they wouldn't have to pay Meta/etc to help steal data for them.

But they'll die before they'll consider doing it that way, lol. And that may just happen at the rate they're going. I just hope the "pretend LLMs are AI when they aren't" crash doesn't take others out with it.

@glynmoody Not really, no.

I'm talking about actually paying people to sit down and write actual training data. I don't just mean taking journalists' articles and feeding them in or that sort of thing. I mean writing data specifically for the models.

@madrobin Huh. I can't tell the goldfinches apart from pics, and asked at Wild Birds Unlimited and got an answer that doesn't always apply.

I only know I have lessers because the Merlin app IDs their vocalizations.

How *do* you tell them apart?

Hands up anyone that needs a kettle connected to the internet to act as a security risk...

Cos it's no good for anything else.

@glynmoody Interesting that the article's examples of AI "getting worse" are actually AI doing exactly what it was designed to do: create passable language summaries of the crap that's out there.

What they want it to do—separate truth from fiction, valuable data from useless data—is not something it was designed to do nor is it capable of doing. That requires the hard work of research (journalistic, academic, professional) that people seem to naively believe they can skip if they use AI to write their papers for them.