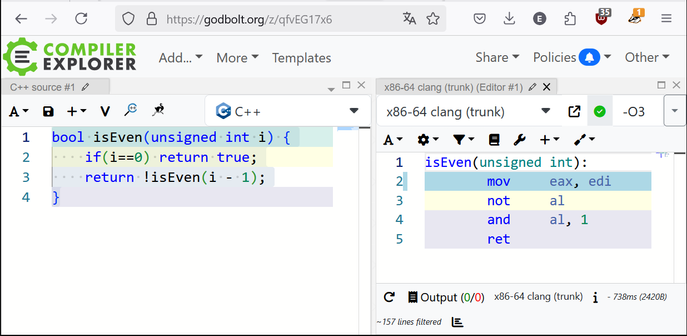

@ela This is quite impressive, and I wonder how complex you could make these kinds of functions while still having this kind of optimisation happening.

But it does make me ask: Should perhaps the compiler warn about these cases? I mean, it's great that the compiler can optimise it, but when it can, the code should probably be simplified, and that would be better than relying on the compiler.

I would expect that this is the combination of a few things:

First, the compiler will turn a tail-recursive function into a loop. This is a fairly trivial transform (Python explicitly prohibits it because it loses backtrace information)l it’s just replacing the tail call with a branch to the entry point.

After that, you have a simple loop. There will be a bit of canonicalisation to make it easier to analyse.

My guess is that this would then be handled by scalar evolution, which tries to model outputs from the loop as polynomials computed from the induction variable. Note that the loop has no side effects. This means each value in each iteration can be calculated from the previous one, which means that there must be a function that goes from the induction variable to the input to the output, so you’re just solving for that.

This particular case might be simpler because the value at the end depends on a single bit in the input, so it might be that known-bits analysis gives you the answer.

If you pass -mllvm -print-after-all to Clang, it will print the LLVM IR after each pass has run, so you can see all of the intermediate steps.