Looks like Corporate #infosec has made its choice.

#RSAC is filled with talks embracing AI and making it "secure".

And they invited and encouraged the Trump regime to spread its disinformation - fully sanctioned by the conference leadership (and by conference attendees who laughed at the regime's jokes and lies and raised no challenge and took no stand during the talk).

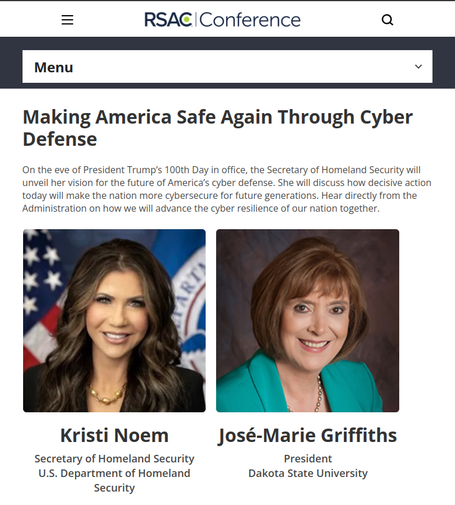

With the ostracization of #ChrisKrebs by industry and the full embrace of Kristi Noem as a speaker, this was the moment that infosec made its bed.

Y'all lie in it now.

:EA DATA. SF: