https://onemillionchessboards.com/

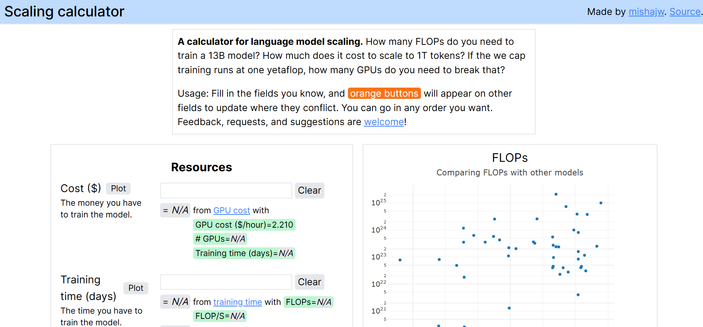

Scaling calculator

A calculator for language model scaling. How many FLOPs do you need to train a 13B model? How much does it cost to scale to 1T tokens? If we cap training runs at one yottaFLOP, how many GPUs do you need to break that?
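The questions above can be roughed out by hand. The sketch below is not the calculator's own method, which the post does not describe. It assumes the common rule of thumb that training a dense transformer costs about 6 × parameters × tokens FLOPs, and it reads the cap as a budget of 1e24 total floating-point operations. The function names, the per-GPU peak throughput, the utilization, and the run length are all hypothetical placeholders.

```python
import math

# Assumption: the cap is read as a budget of 1e24 total
# floating-point operations, not a rate in FLOP/s.
YOTTA = 1e24


def training_flops(n_params: float, n_tokens: float) -> float:
    """Estimate training compute with the rule of thumb C ~ 6 * N * D.

    This is an approximation for dense transformers, not an exact count.
    """
    return 6.0 * n_params * n_tokens


def gpus_needed(total_flops: float, peak_flops_per_gpu: float,
                utilization: float, days: float) -> int:
    """Number of GPUs required to spend total_flops within the given days.

    peak_flops_per_gpu and utilization are hypothetical inputs; real
    hardware and real training runs vary widely.
    """
    seconds = days * 24 * 60 * 60
    flops_per_gpu = peak_flops_per_gpu * utilization * seconds
    return math.ceil(total_flops / flops_per_gpu)


if __name__ == "__main__":
    compute = training_flops(13e9, 1e12)  # 13B parameters, 1T tokens
    print(f"13B params on 1T tokens: {compute:.2e} FLOPs")
    # Hypothetical GPU: 1e15 FLOP/s peak, 40% utilization, 30-day run.
    print(gpus_needed(YOTTA, peak_flops_per_gpu=1e15, utilization=0.4, days=30))
```

Cost follows the same pattern: multiply GPU count by run length and an assumed hourly price, which is left out here because the post gives no pricing.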

'Scaling ResNets in the Large-depth Regime', by Pierre Marion, Adeline Fermanian, Gérard Biau, Jean-Philippe Vert.

http://jmlr.org/papers/v26/22-0664.html

#scaling #deep #gradients

#EclipseStore hands-on? We got you.

Join Florian Habermann & Christian Kümmel for two #workshops—one for getting started, one for #scaling to the stars.

In-memory magic, #cluster ready.

🎟️ €19 each → https://2025.europe.jcon.one/tickets

'Scaling Data-Constrained Language Models', by Niklas Muennighoff et al.

http://jmlr.org/papers/v26/24-1000.html

#datablations #epochs #scaling

"The vast investments in scaling... always seemed to me to be misplaced."

"The premise that #AI could be indefinitely improved by #scaling was always on shaky ground… In November last year, reports indicated that #OpenAI researchers discovered that the upcoming version of its GPT large language model displayed significantly less improvement, and in some cases, no improvements at all than previous versions did over their predecessors."

https://www.troyhunt.com/closer-to-the-edge-hyperscaling-have-i-been-pwned-with-cloudflare-workers-and-caching/

https://www.seangoedecke.com/difficulty-in-big-tech/

http://mikehadlow.blogspot.com/2012/05/configuration-complexity-clock.html

Can reinforcement learning for LLMs scale beyond math and coding tasks? Probably

https://arxiv.org/abs/2503.23829

#HackerNews #reinforcementlearning #LLMs #scaling #math #codingtasks #AIresearch

Expanding RL with Verifiable Rewards Across Diverse Domains

Reinforcement learning (RL) with verifiable rewards (RLVR) has shown promising results in mathematical reasoning and coding tasks where well-structured reference answers are available. However, its applicability to broader domains remains underexplored. In this work, we study the extension of RLVR to more diverse domains such as medicine, chemistry, psychology, and economics. We observe high agreement in binary judgments across different large language models (LLMs) when objective reference answers exist, which challenges the necessity of large-scale annotation for training domain-specific reward models. To address the limitations of binary rewards when handling unstructured reference answers, we further incorporate model-based soft scoring into RLVR to improve its flexibility. Our experiments show that a distilled generative reward model can serve as an effective cross-domain verifier, providing reliable reward signals for RL without requiring domain-specific annotations. By fine-tuning a base 7B model using various RL algorithms against our reward model, we obtain policies that outperform state-of-the-art open-source aligned LLMs such as Qwen2.5-72B-Instruct and DeepSeek-R1-Distill-Qwen-32B by a large margin, across domains in free-form answer settings. This also strengthens RLVR's robustness and scalability, highlighting its potential for real-world applications with noisy or weak labels.
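A toy illustration of the binary versus soft reward distinction the abstract draws. This is not the paper's implementation: it assumes a verifier that returns a probability that the response matches the reference answer, and the function names and the 0.5 threshold are hypothetical.

```python
def binary_reward(p_correct: float, threshold: float = 0.5) -> float:
    """Hard 0/1 reward: collapses the verifier's confidence to a yes/no judgment."""
    return 1.0 if p_correct >= threshold else 0.0


def soft_reward(p_correct: float) -> float:
    """Soft reward: passes the verifier's confidence through, clipped to [0, 1]."""
    return min(1.0, max(0.0, p_correct))


if __name__ == "__main__":
    # Hypothetical verifier confidences for three responses.
    for p in (0.95, 0.55, 0.10):
        print(p, binary_reward(p), soft_reward(p))
```

With a binary reward, a borderline response and a clearly correct one receive the same signal. The soft score keeps that difference, which is one plausible reason it helps when reference answers are unstructured.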