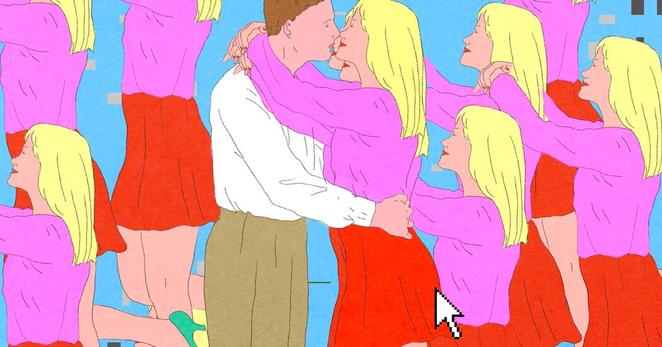

"Leaving consumers the choice to engage intimately with A.I. sounds good in theory. But companies with vast troves of data know far more than the public about what induces powerful delusional thinking. A.I. companions that burrow into our deepest vulnerabilities will wreak havoc on our mental health and relationships far beyond what pornography, the manosphere and social media have done.

Skeptics conflate romantic A.I. companions with porn, and argue that regulating them would be impossible. But that’s the wrong analogy. Pornography is static media for passive consumption. A.I. lovers pose a far greater threat, operating more like human escorts without agency, boundaries or time limits.

Governments should classify these chatbots not simply as another form of media, but as a dependency-fostering product with known psychological risks, like gambling or tobacco.

Regulation would start with universal laws for A.I. companions, including clear warning labels, time limits, 18-plus age verification and, most important, a new framework for liability that places the burden on companies to prove their products are safe, not on users to show harm.

Absent swift legislation, some of the largest A.I. companies are poised to repeat the sins of social media on a more devastating scale."

https://www.nytimes.com/2025/11/17/opinion/her-film-chatbots-romance.html

#AI #GenerativeAI #Chatbots #AICompanions #AISafety #AIEthics