I understand the “I am morally opposed to using AI” person much better than the “I won’t use it until it’s perfect” person.

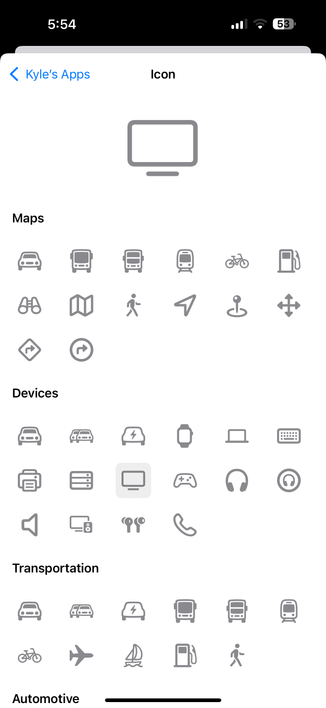

It took me so long to manually recreate the icon selection screen from Shortcuts, along with all of its categories.

My use and discussion of AI systems is not an implicit endorsement of them or their means or their ends or their benefactors. I just don't trust anyone else to judge the field, figure out the trajectory, identify what is useful, articulate what heavy exposure does to one's impulses and thoughts, etc. I think having a good handle on those aspects is important to my own survival and to helping me realize the world I want to see, and there's no other way for me to get there.

With Rite Aid and Bartell Drugs becoming CVS, I think we’re down to just Walgreens and CVS. It’s like Pharmacy 2048 out here. Anyone selling event contracts on who wins?

I think we are barreling toward a future where an agent is the frontend for most software products. The companies that will be able to exist in that world are ones that own customer data, have proprietary data themselves, or provide access to gatekept services (e.g. brokerages). There is no moat for solving problems at runtime. Bespoke UI, if necessary, will be generated just-in-time. These big beautiful screens will mostly be used for video consumption.

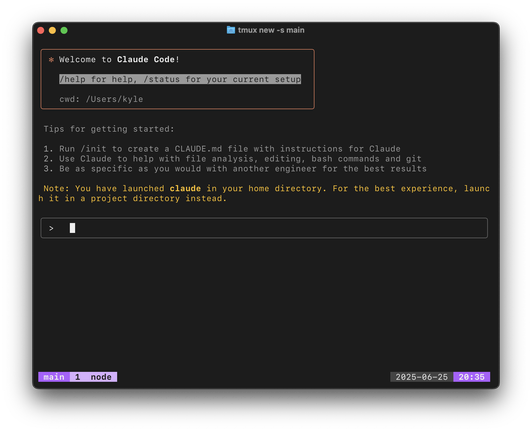

GraphQL via MCP is a slept-on giant. I have never felt like it lived up to its promise for frontend technologies, but letting an agent introspect the schema and dynamically generate scoped queries at runtime is the perfect fit. It amounts to emergent behavior. I used apollo-mcp-server to expose my company’s schema over MCP, then paired it with documents covering domain knowledge and schema patterns, and now Claude Code can autonomously use our entire application without a frontend.
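A minimal sketch of the "introspect, then generate a scoped query" idea, in Python. This is not how apollo-mcp-server works internally. It assumes the agent has already fetched the schema with GraphQL's standard `__schema` introspection query; `INTROSPECTION` below is a trimmed, hand-written stand-in for that result, and the `Order`/`Customer` types and the `build_query` helper are hypothetical. The point is that the schema bounds what the agent can ask for: fields that don't exist are rejected before any request is sent, and the query selects only what the task needs.

```python
import json

# Hypothetical, trimmed stand-in for a `__schema` introspection result.
# Real results carry more keys; wrapper kinds (NON_NULL, LIST) nest via "ofType".
INTROSPECTION = {
    "queryType": {"name": "Query"},
    "types": [
        {"name": "Query", "fields": [
            {"name": "order", "args": [{"name": "id"}],
             "type": {"kind": "OBJECT", "name": "Order", "ofType": None}},
        ]},
        {"name": "Order", "fields": [
            {"name": "id", "args": [],
             "type": {"kind": "NON_NULL", "name": None,
                      "ofType": {"kind": "SCALAR", "name": "ID", "ofType": None}}},
            {"name": "status", "args": [],
             "type": {"kind": "ENUM", "name": "OrderStatus", "ofType": None}},
            {"name": "customer", "args": [],
             "type": {"kind": "OBJECT", "name": "Customer", "ofType": None}},
        ]},
        {"name": "Customer", "fields": [
            {"name": "email", "args": [],
             "type": {"kind": "SCALAR", "name": "String", "ofType": None}},
        ]},
    ],
}


def named_type(type_ref):
    """Unwrap NON_NULL/LIST wrappers down to the underlying named type."""
    while type_ref["kind"] in ("NON_NULL", "LIST"):
        type_ref = type_ref["ofType"]
    return type_ref


def fields_of(schema, type_name):
    """Return {field name: field definition} for one named type."""
    for t in schema["types"]:
        if t["name"] == type_name:
            return {f["name"]: f for f in t["fields"]}
    raise ValueError(f"unknown type: {type_name}")


def build_selection(schema, type_name, selection):
    """Render a selection set, rejecting fields the schema doesn't define.

    Items are field names (leaves) or (field_name, sub_selection) tuples.
    This sketch treats only OBJECT types as needing a sub-selection.
    """
    fields = fields_of(schema, type_name)
    parts = []
    for item in selection:
        name, sub = (item, None) if isinstance(item, str) else item
        if name not in fields:
            raise ValueError(f"{type_name} has no field {name!r}")
        target = named_type(fields[name]["type"])
        if target["kind"] == "OBJECT":
            if not sub:
                raise ValueError(f"{type_name}.{name} needs a sub-selection")
            parts.append(f"{name} {build_selection(schema, target['name'], sub)}")
        else:
            if sub:
                raise ValueError(f"{type_name}.{name} is a leaf field")
            parts.append(name)
    return "{ " + " ".join(parts) + " }"


def build_query(schema, root_field, args, selection):
    """Build a query scoped to one root field, validating arguments too."""
    root_fields = fields_of(schema, schema["queryType"]["name"])
    if root_field not in root_fields:
        raise ValueError(f"no root field {root_field!r}")
    field = root_fields[root_field]
    unknown = set(args) - {a["name"] for a in field["args"]}
    if unknown:
        raise ValueError(f"unknown arguments: {sorted(unknown)}")
    # Inlining via json.dumps is fine for simple strings/numbers; a real
    # client would send arguments as GraphQL variables instead.
    arg_part = ""
    if args:
        arg_part = "(" + ", ".join(f"{k}: {json.dumps(v)}" for k, v in args.items()) + ")"
    inner = build_selection(schema, named_type(field["type"])["name"], selection)
    return f"query {{ {root_field}{arg_part} {inner} }}"


print(build_query(INTROSPECTION, "order", {"id": "42"},
                  ["id", "status", ("customer", ["email"])]))
# query { order(id: "42") { id status customer { email } } }
```

In this framing the agent picks `root_field` and `selection` at runtime from whatever the introspected schema offers, so no one has to hand-write a screen or a fixed query for each task.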

I feel like I'm having an out-of-body experience wherein my whole community is obsessing over Liquid Glass while I'm saying this is the best software interface I have ever used or could even imagine.

I legitimately think that agentic LLMs are the future of personal computers, the new operating system. Using Claude Code to interact with your own software over MCP, and watching it autonomously solve problems with that software, is transcendent. The rest of the computer feels so antiquated; handmade GUIs feel cumbersome. Our computers will use our computers soon.

Techmeme (@Techmeme@techhub.social)

Anthropic now lets Claude app users build, host, and share AI-powered apps directly in Claude via Artifacts, launching in beta on Free, Pro, and Max tiers (Jay Peters/The Verge)

https://www.theverge.com/news/693342/anthropic-claude-ai-apps-artifact

http://www.techmeme.com/250625/p34#a250625p34

This is funny and topical, but no one on Mastodon has listened to a song produced in the last 15 years. My bad.

https://mister.computer/@kyle/114745413864415216

Kyle Hughes (@kyle@mister.computer)

Thought I'd end up with Sonnet

But it wasn't a match

Wrote some code with Gemini

Now I'm programming less

Even almost paid two hundred

And for Claude, I'm so thankful

Wish I could say thank you to o3

Cause it was an angel