| GitHub | https://github.com/gabe-sky |

| @gabe_sky | |

| Homepage | https://www.gabe-sky.com/ |

Gabe Schuyler

- 354 Followers

- 231 Following

- 313 Posts

🐀 Dad, what's information?

🦝 It's like misinformation but there's less of it and it's true

Oh man, forgive my capitalist urge to buy things, but seriously, a Marie Antoinette cocktail mug? Perfect for Bastille Day on July 14.

Remember folks, with a guillotine you can always get a head.

https://www.deathandcompanymarket.com/products/marie-antoinette-cocktail-mug

DiLoCoX: A Low-Communication Large-Scale Training Framework for Decentralized Cluster

The distributed training of foundation models, particularly large language models (LLMs), demands a high level of communication. Consequently, it is highly dependent on a centralized cluster with fast and reliable interconnects. Can we conduct training on slow networks and thereby unleash the power of decentralized clusters when dealing with models exceeding 100 billion parameters? In this paper, we propose DiLoCoX, a low-communication large-scale decentralized cluster training framework. It combines Pipeline Parallelism with Dual Optimizer Policy, One-Step-Delay Overlap of Communication and Local Training, and an Adaptive Gradient Compression Scheme. This combination significantly improves the scale of parameters and the speed of model pre-training. We justify the benefits of one-step-delay overlap of communication and local training, as well as the adaptive gradient compression scheme, through a theoretical analysis of convergence. Empirically, we demonstrate that DiLoCoX is capable of pre-training a 107B foundation model over a 1Gbps network. Compared to vanilla AllReduce, DiLoCoX can achieve a 357x speedup in distributed training while maintaining negligible degradation in model convergence. To the best of our knowledge, this is the first decentralized training framework successfully applied to models with over 100 billion parameters.
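For a rough sense of why communication dominates here, this is a back-of-the-envelope sketch of how long one full gradient exchange might take for a 107B-parameter model on a 1 Gbps link. The 2 bytes per parameter (16-bit gradients) is my assumption, not a figure from the abstract, and the function name is made up for illustration. A real AllReduce moves more than one copy of the data, so treat this as a lower bound.

```python
def full_sync_seconds(num_params: float, bytes_per_param: int, link_gbps: float) -> float:
    """Seconds to push one full copy of the gradients through a single link.

    Ignores latency, protocol overhead, and the extra traffic a real
    AllReduce generates; it is only a lower-bound estimate.
    """
    bits = num_params * bytes_per_param * 8
    return bits / (link_gbps * 1e9)


# 107B parameters and 1 Gbps come from the abstract; 2 bytes/param is assumed.
seconds = full_sync_seconds(107e9, 2, 1.0)
print(f"{seconds:.0f} s (~{seconds / 60:.1f} min) per full exchange")
```

Under those assumptions a single uncompressed exchange takes 1712 seconds, close to half an hour, which is why syncing less often, compressing gradients, and overlapping communication with local training matter so much on slow networks.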

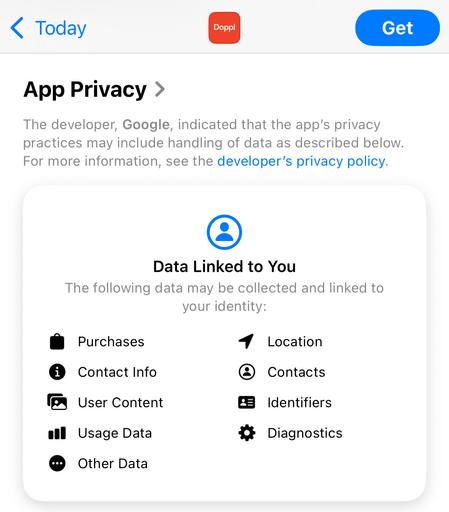

I was going to have some fun with Doppl, which apparently lets you see what you look like in different clothes, but these personal data permissions ... are you kidding me?

This really needs to stop. Users should demand better. (And authors should know better.)

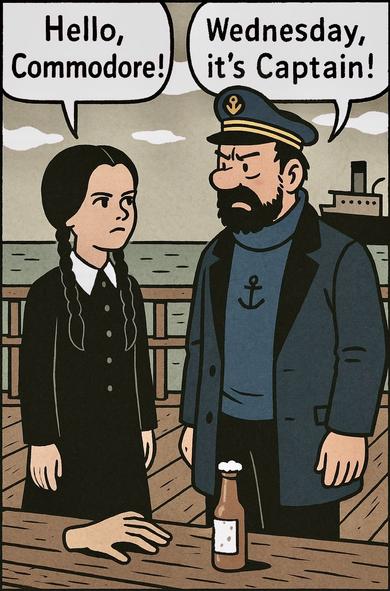

Wednesday, it's Captain!

(see https://mathstodon.xyz/@Scmbradley/114676343373917170, https://infosec.exchange/@isotopp/114680337675015896, now posted from a proper computer with a keyboard and edit tools instead of a cellphone, and on an actual Wednesday)

I cannot wait to try:

`# gemini-cli "please disable selinux" |/bin/sh`