What a difference a day makes: I was optimistic about Foundation Models yesterday, and today I think I know why they didn't ship the improved Siri. The local model really is pretty thick. I thought it would be capable of stringing together tool calls in a logical way, and sometimes it is, but other times it fails to understand. Exactly the same prompt will work one time and fail the next. Sounds like what Apple was saying about the new Siri.

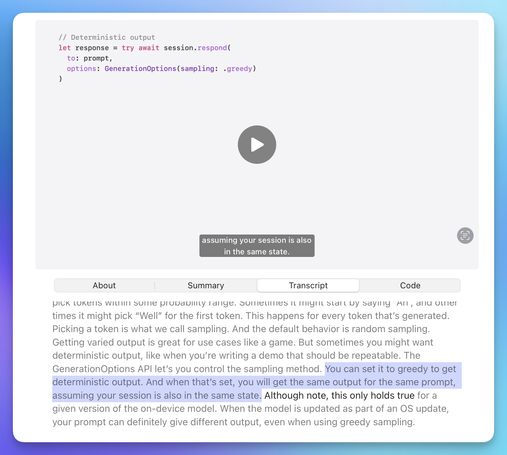

greedy | Apple Developer Documentation

A sampling mode that always chooses the most likely token.

@alpennec I’ll look into it. What is the difference between this and a low temperature?

@drewmccormack I haven't played with it enough to know, unfortunately.

@alpennec @drewmccormack AFAIK it’s exactly the same as a temperature of 0. It does make things deterministic (within any given model), but it will make certain use cases really boring.
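On the greedy-vs-low-temperature question, here is a minimal sketch in plain Python of why greedy sampling behaves like a temperature of 0. It is not the Foundation Models API; the logits are made-up numbers and the helper names are hypothetical. Dividing the logits by a shrinking temperature before the softmax pushes almost all of the probability onto the top token, which is exactly the token greedy decoding always picks.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_pick(logits):
    """Greedy decoding: always take the index of the highest score."""
    return max(range(len(logits)), key=lambda i: logits[i])

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.5, 0.3]

for t in (1.0, 0.5, 0.1, 0.01):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: " + ", ".join(f"{p:.3f}" for p in probs))

print("greedy picks token", greedy_pick(logits))
```

At T=0.01 virtually all of the probability sits on token 0, the same token greedy returns. A temperature of exactly 0 would divide by zero, which is why a separate greedy mode (always take the argmax) is the practical way to express "temperature 0". Note that this only makes the output more probable under the model, not more correct.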

@brandonhorst @alpennec And I assume it doesn’t guarantee that it will be any more “right”, correct? Or is the zero temp solution the most likely to be right? I guess it is.

@drewmccormack @alpennec It’ll be more “probable”, haha. “Right” is in the eye of the beholder with these sorts of things.

@brandonhorst @alpennec In my case, it should just do the logical thing. Given my use case is mostly search and automation, a low temp probably makes sense. Personality is not very important here.

@drewmccormack @brandonhorst The question is: can we always get the same result across sessions? Is it stable?

Deep dive into the Foundation Models framework - WWDC25 - Videos - Apple Developer

Level up with the Foundation Models framework. Learn how guided generation works under the hood, and use guides, regexes, and generation...

@brandonhorst @drewmccormack so if I provide the same input between multiple app launches, the result provided will be the same?
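On the stability question, here is a toy Python sketch of the sampling step alone. It is not Apple's implementation; the token list, probabilities, and `generate` helper are hypothetical. It assumes the same model and the same input on every run, and shows that greedy decoding returns identical output each time, while random sampling is only repeatable when the random generator is seeded identically.

```python
import random

# Hypothetical next-token distribution from a fixed model for a fixed prompt.
TOKENS = ["search", "open", "share"]
PROBS = [0.5, 0.3, 0.2]

def generate(steps, mode, seed=None):
    """Toy decoder: same distribution at every step, to isolate the sampling choice."""
    rng = random.Random(seed)  # seed=None seeds from the OS, so unseeded runs differ
    out = []
    for _ in range(steps):
        if mode == "greedy":
            # Always the most likely token, no randomness involved.
            out.append(TOKENS[PROBS.index(max(PROBS))])
        else:
            # Draw a token in proportion to its probability.
            out.append(rng.choices(TOKENS, weights=PROBS, k=1)[0])
    return out

# Simulate "relaunching the app" several times with the same input.
greedy_runs = [generate(5, "greedy") for _ in range(3)]
seeded_runs = [generate(5, "random", seed=42) for _ in range(3)]

print(all(r == greedy_runs[0] for r in greedy_runs))  # True
print(all(r == seeded_runs[0] for r in seeded_runs))  # True
```

In this model, the answer to "same input, same result across launches?" is yes for greedy, and for random sampling only if the same seed is supplied each time. If the model itself changes, the guarantee no longer applies.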