@drewmccormack Honestly, I’m not surprised. I’ve been playing around with various local models on my Mac, and even the 16–24B-parameter versions of well-respected models are hugely inconsistent and, overall, a disappointment compared to Big AI.

I appreciate that their local models are highly specialised, but 3B parameters really did look like too little for versatile tasks.

@drewmccormack Interesting. This might help you get a better idea of the limits: someone made an example app for chatting with the Foundation model.

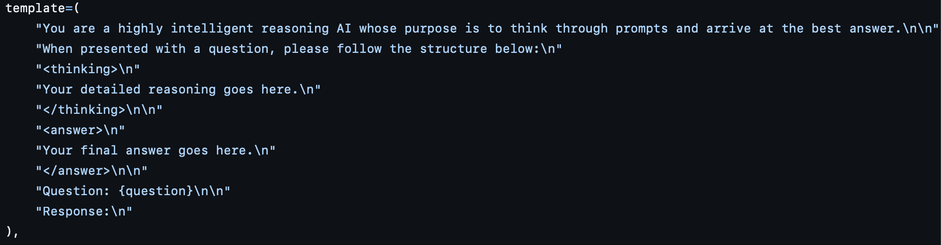

@drewmccormack @obrhoff Yes, you can have it do that itself. It's all the same magic trick: telling it to "think" just helps to… influence the conditional output distribution toward tokens that reflect a good answer. LLMs really are just "predicting the next token," so by stuffing the context with relevant information (or having the model stuff the context itself), we increase the chances that the next tokens stay on the rails.

Caveat: this may be less effective given the small context window of Foundation Models.

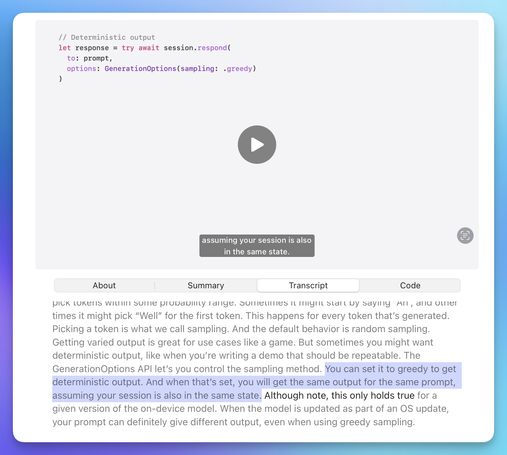
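A minimal sketch of the "make it think first" trick, assuming plain string prompts. The helpers `buildReasoningPrompt` and `extractAnswer` are hypothetical names, not part of any Apple API: the first puts relevant facts into the context and asks for reasoning before the answer, so the tokens the model writes while "thinking" also condition its final answer. Actually sending the prompt to the model (for example through a `LanguageModelSession`) is left out so the sketch runs anywhere; given the context-window caveat, keep the added information short.

```swift
import Foundation

// Hypothetical helper (not an Apple API): builds a prompt that puts relevant
// facts into the context and asks the model to reason before answering,
// so the reasoning tokens it writes also condition the final answer.
func buildReasoningPrompt(question: String, context: [String]) -> String {
    var lines: [String] = []
    if !context.isEmpty {
        lines.append("Relevant information:")
        lines.append(contentsOf: context.map { "- \($0)" })
        lines.append("")
    }
    lines.append("Question: \(question)")
    lines.append("First, think step by step and write out your reasoning.")
    lines.append("Then give the final answer on one line starting with \"Answer:\".")
    return lines.joined(separator: "\n")
}

// Hypothetical helper: returns the text after the last "Answer:" line,
// or nil if the model ignored the requested format.
func extractAnswer(from reply: String) -> String? {
    for line in reply.split(separator: "\n").reversed() {
        let trimmed = line.trimmingCharacters(in: .whitespaces)
        if trimmed.hasPrefix("Answer:") {
            return trimmed.dropFirst("Answer:".count).trimmingCharacters(in: .whitespaces)
        }
    }
    return nil
}

let prompt = buildReasoningPrompt(
    question: "Which is heavier, 1 kg of feathers or 1 kg of steel?",
    context: ["Both quantities are measured in kilograms."]
)
print(prompt)
```

Asking for a fixed "Answer:" line is a design choice that lets you discard the reasoning afterwards while still having it influence the output.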

@drewmccormack Have you tried using `GenerationOptions(sampling: .greedy)`?

https://developer.apple.com/documentation/foundationmodels/generationoptions/samplingmode/greedy
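To see what `.greedy` changes, here is a toy sketch in plain Swift rather than the framework itself. The vocabulary, logits and function names are invented for illustration, and this is not how Foundation Models implements sampling internally. Greedy decoding always takes the most likely next token. Random sampling draws from the probability distribution, so the same prompt can produce different outputs.

```swift
import Foundation

// Made-up next-token scores (logits) for a toy 4-token vocabulary.
let vocabulary = ["yes", "no", "maybe", "banana"]
let logits: [Double] = [2.0, 1.5, 0.5, -1.0]

// Temperature-scaled softmax: turns logits into probabilities.
// Lower temperatures concentrate probability on the top token.
func softmax(_ logits: [Double], temperature: Double = 1.0) -> [Double] {
    let scaled = logits.map { $0 / temperature }
    let maxScore = scaled.max() ?? 0
    let exps = scaled.map { exp($0 - maxScore) }  // subtract the max for numerical stability
    let total = exps.reduce(0, +)
    return exps.map { $0 / total }
}

// Greedy decoding: always take the highest-scoring token (assumes non-empty logits).
func greedyPick(_ logits: [Double]) -> Int {
    logits.indices.max { logits[$0] < logits[$1] }!
}

// Random sampling: draw a token index in proportion to its probability.
func samplePick<G: RandomNumberGenerator>(_ logits: [Double], temperature: Double, using rng: inout G) -> Int {
    let probs = softmax(logits, temperature: temperature)
    let r = Double.random(in: 0..<1, using: &rng)
    var cumulative = 0.0
    for (index, p) in probs.enumerated() {
        cumulative += p
        if r < cumulative { return index }
    }
    return probs.count - 1  // fallback for floating-point rounding
}

var rng = SystemRandomNumberGenerator()
let greedyRuns = (0..<5).map { _ in vocabulary[greedyPick(logits)] }
let sampledRuns = (0..<5).map { _ in vocabulary[samplePick(logits, temperature: 1.0, using: &rng)] }
print("greedy: ", greedyRuns)   // the same token every time
print("sampled:", sampledRuns)  // usually varies between runs
```

Greedy decoding trades variety for repeatability. If the inconsistency complained about at the top of the thread means getting different answers to the same prompt, greedy sampling should make runs more reproducible. It can't make a weak answer better, though; it just makes the model give the same one each time. In the framework itself, the options are passed per request (for example via `respond(to:options:)` on a `LanguageModelSession`); check the linked documentation for the exact signatures.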