What a difference a day makes: I was optimistic about Foundation Models yesterday, and today I think I know why Apple didn't ship the improved Siri. The local model really is pretty thick. I thought it would be capable of stringing tool calls together in a logical way, and sometimes it is, but other times it just fails to understand. Exactly the same prompt will work one time and fail the next. That sounds like what Apple was saying about the new Siri.

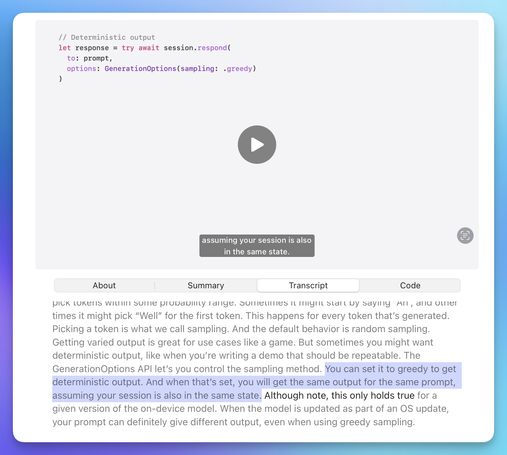

@drewmccormack have you tried using GenerationOptions(sampling: .greedy)?

https://developer.apple.com/documentation/foundationmodels/generationoptions/samplingmode/greedy
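A minimal sketch of how the greedy-sampling suggestion could be tried, assuming the `LanguageModelSession.respond(to:options:)` API from the FoundationModels framework on an Apple Intelligence device. The helper names `allIdentical` and `checkRepeatability` are hypothetical. Greedy sampling always picks the most likely next token, so repeated runs of the same prompt should stop varying. That would make failures reproducible, but it won't by itself make the model any better at chaining tool calls.

```swift
#if canImport(FoundationModels)
import FoundationModels
#endif

/// Hypothetical helper: true when there is at least one output
/// and every output is exactly the same string.
func allIdentical(_ outputs: [String]) -> Bool {
    guard let first = outputs.first else { return false }
    return outputs.allSatisfy { $0 == first }
}

#if canImport(FoundationModels)
/// Hypothetical helper: sends the same prompt `runs` times with greedy
/// sampling and reports whether every response text was identical.
@available(macOS 26.0, iOS 26.0, *)
func checkRepeatability(prompt: String, runs: Int = 3) async throws -> Bool {
    // Greedy sampling removes the random token choice between runs.
    let options = GenerationOptions(sampling: .greedy)
    var outputs: [String] = []
    for _ in 0..<runs {
        // Fresh session per run so earlier turns don't end up in the transcript.
        let session = LanguageModelSession()
        let response = try await session.respond(to: prompt, options: options)
        outputs.append(response.content)
    }
    return allIdentical(outputs)
}
#endif
```

Comparing against the default (non-greedy) options with the same loop would show whether the flakiness comes from sampling or from the model itself.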