Where should you run LLM-generated code to ensure it's both safe and scalable? And why did we move from a cool in-browser WebAssembly approach to boring, yet reliable, cloud computing?

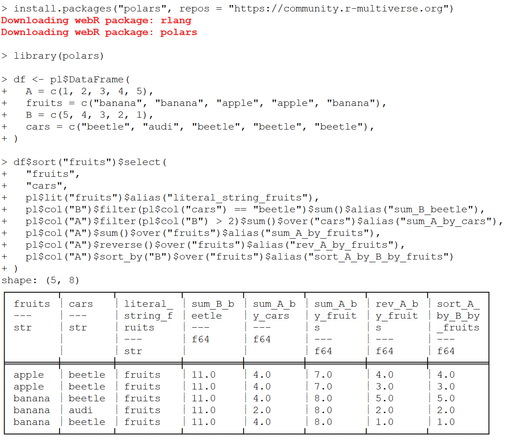

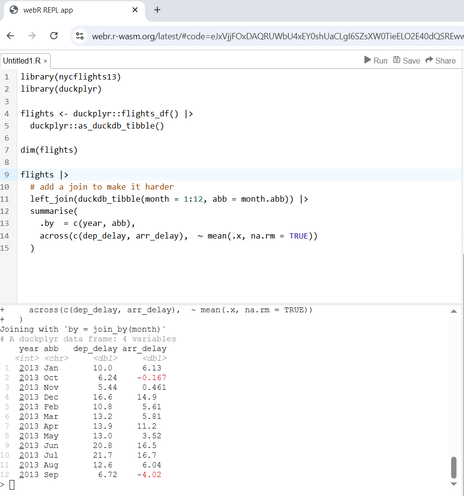

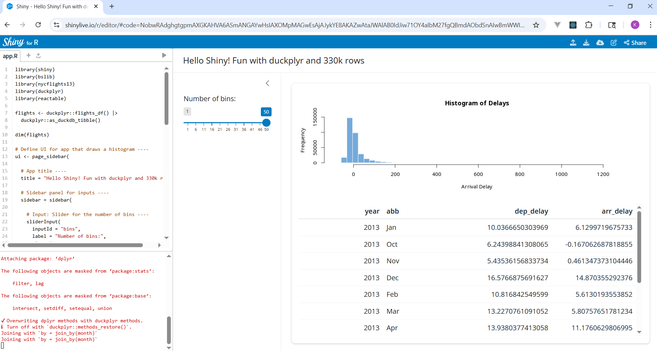

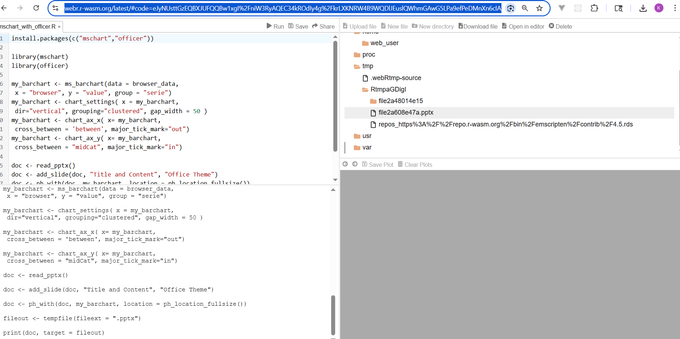

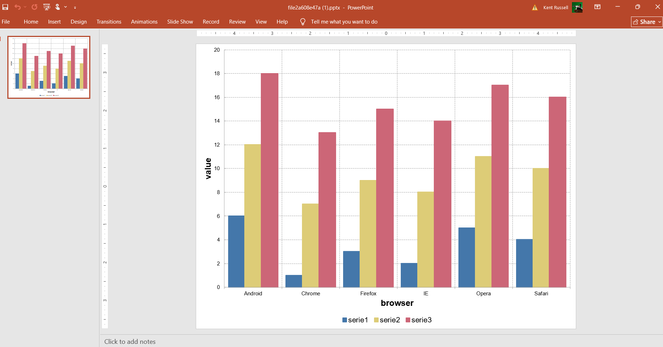

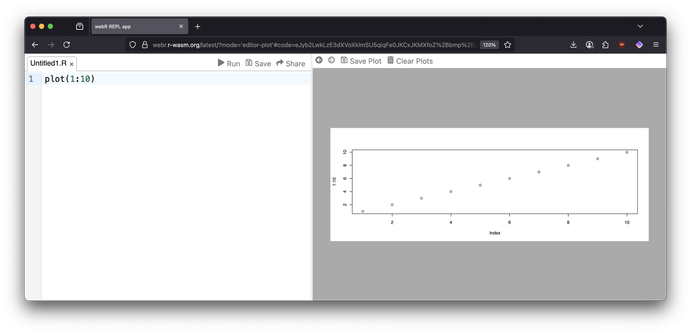

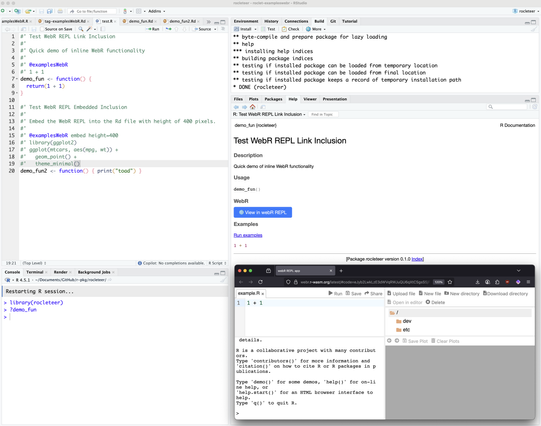

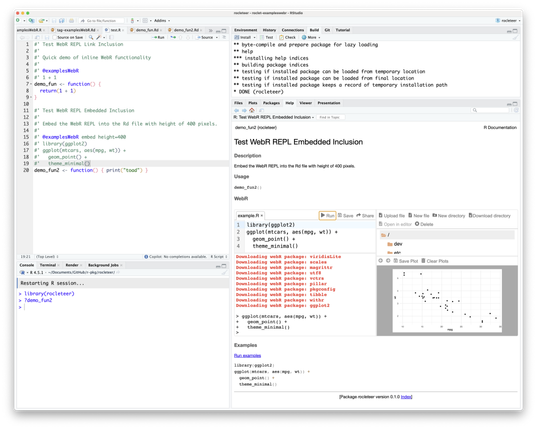

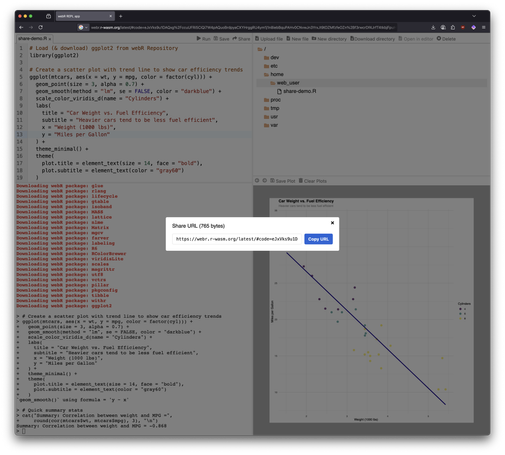

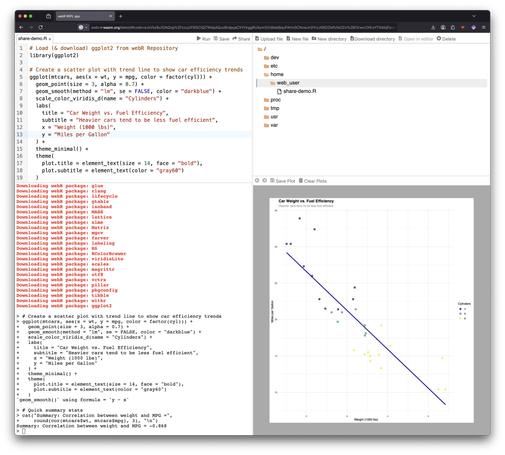

Building our AI chart generator taught us that running R in the browser with WebR, while promising, caused practical problems for both the user experience and our development workflow. Moving code execution to AWS Lambda proved to be a more robust solution.
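For a rough idea of what handing generated code to Lambda can look like, here is a minimal Python sketch. It is not our production code: the function name `r-chart-sandbox` and the `{"code": ...}` payload shape are hypothetical, and the client is assumed to behave like `boto3.client("lambda")`.

```python
import json


def run_generated_code(lambda_client, code, function_name="r-chart-sandbox"):
    """Send LLM-generated code to a Lambda function and return its parsed reply.

    `lambda_client` is assumed to behave like boto3.client("lambda").
    The function name and the {"code": ...} payload shape are hypothetical.
    """
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # synchronous: wait for the result
        Payload=json.dumps({"code": code}).encode("utf-8"),
    )
    # The response payload is a stream; this sketch assumes it contains JSON.
    body = json.loads(response["Payload"].read())
    # Lambda adds "FunctionError" when the invocation failed inside the function.
    if response.get("FunctionError"):
        raise RuntimeError(f"Sandboxed code failed: {body}")
    return body
```

With real credentials this would be called as `run_generated_code(boto3.client("lambda"), generated_r_code)`. Passing the client in as an argument keeps the sketch testable without an AWS account.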

https://quesma.com/blog-detail/sandboxing-ai-generated-code-why-we-moved-from-webr-to-aws-lambda