Paper page - The Common Pile v...

https://www.all-ai.de/news/news24/ki-training-free

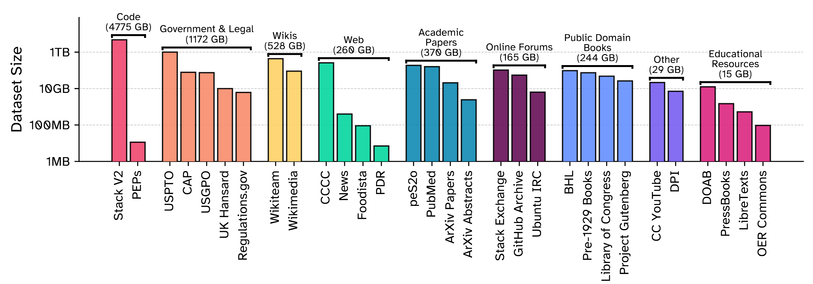

TechCrunch: EleutherAI releases massive AI training dataset of licensed and open domain text. “The dataset, called the Common Pile v0.1, took around two years to complete in collaboration with AI startups Poolside, Hugging Face, and others, along with several academic institutions. Weighing in at 8 terabytes in size, the Common Pile v0.1 was used to train two new AI models from EleutherAI, […]”

Researchers have created a dataset for training and evaluating language models built only from openly licensed and public-domain text, so it can be used without infringing intellectual property!

The Common Pile includes diverse text sources such as web content, books, research papers (from PubMed and arXiv), and online discussions. Note that arXiv papers are preprints and are not peer-reviewed. 🤔 The dataset is designed to be high-quality and reproducible for NLP and LLM research.

Resource:

https://github.com/r-three/common-pile/blob/main/paper.pdf