I shared a post on the OpenAI forum about using Pomerium to secure remote MCP servers with Zero Trust. It’s all open source. We show how to expose MCP servers (like GitHub or Notion) to LLM clients without leaking tokens or re-implementing OAuth.

Happy to chat more over coffee or on a podcast!

https://community.openai.com/t/zero-trust-architecture-for-mcp-servers-using-pomerium/1288157 #ztna #security #mcp #agenticai

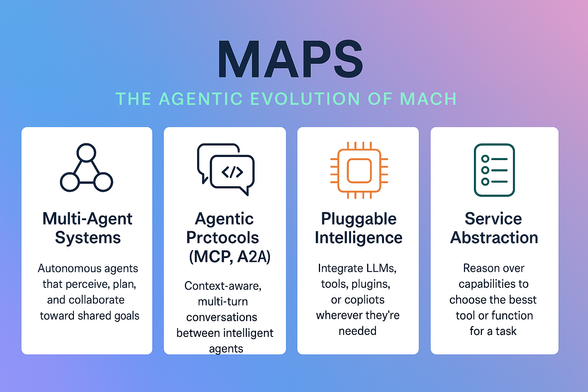

Zero Trust Architecture for MCP Servers Using Pomerium

We’ve been building some open-source tools to make it easier to run remote MCP servers securely using Zero Trust principles, especially when those servers need to access upstream OAuth-based services like GitHub or Notion. Pomerium acts as an identity-aware proxy to:

- Terminate TLS and enforce Zero Trust at the edge
- Handle the full OAuth 2.1 flow for your MCP server
- Keep upstream tokens (e.g., GitHub, Notion) out of reach from clients

Our demo app (MCP App Demo) uses the OpenAI Responses API...
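For a rough idea of what the client side can look like, here is a minimal sketch of a Responses API request body that attaches a remote MCP server sitting behind the proxy. It is not taken from the demo app: the function name, the `mcp.example.com` URL, the `github` label, the model name, and the placeholder token are all assumptions for illustration. The point it tries to show is that the client only ever holds a token for the proxy, never the upstream GitHub or Notion token.

```python
import json


def build_responses_request(server_url: str, proxy_token: str, prompt: str) -> dict:
    """Build a Responses API request body with one remote MCP tool.

    Hypothetical helper: `proxy_token` is assumed to be a token accepted by
    the identity-aware proxy, not an upstream GitHub/Notion token.
    """
    return {
        "model": "gpt-4.1",  # assumed model name, swap in your own
        "input": prompt,
        "tools": [
            {
                "type": "mcp",
                "server_label": "github",  # assumed label
                "server_url": server_url,  # the proxied MCP route
                # The client authenticates to the proxy only; the upstream
                # OAuth token is expected to stay on the server side.
                "headers": {"Authorization": f"Bearer {proxy_token}"},
                "require_approval": "never",
            }
        ],
    }


if __name__ == "__main__":
    body = build_responses_request(
        server_url="https://mcp.example.com/mcp",  # placeholder URL
        proxy_token="PROXY_ISSUED_TOKEN",  # placeholder value
        prompt="List my open pull requests.",
    )
    # Print the JSON that would be POSTed to the Responses API.
    print(json.dumps(body, indent=2))
```

The sketch only builds and prints the payload, so it runs without network access or an API key; sending it is left to whichever HTTP client or SDK you already use.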