At the bus stop this morning, more people are waiting than usual. Most of them are looking at their phones. A big sign says that the bus is being rerouted because of construction work and will not serve this stop. But nobody has noticed. I point it out to people, but they just look at me, irritated. Only an old lady thanks me kindly for the tip and sets off for the replacement stop. Everyone else goes back to looking at their phones.

Not sure I understand your question. You can simply prompt a model with the first sentences of, e.g., the US Constitution and see how per-word surprisal quickly goes to zero. This is true even in relatively old models like GPT-2. That's not really surprising, but it does raise some methodological issues when using surprisal as an explanatory variable for human language processing.
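For reference, a minimal sketch of the surprisal calculation itself, assuming the model's probability for each word in context has already been extracted. The function name `surprisal_bits` and the probabilities below are made up for illustration and are not output from GPT-2 or any other model.

```python
import math


def surprisal_bits(probabilities):
    """Return the surprisal -log2(p) in bits for each probability in (0, 1]."""
    return [-math.log2(p) for p in probabilities]


# Hypothetical per-word probabilities for a text the model has memorized:
# they approach 1 as the model recognizes the text.
illustrative_probs = [0.05, 0.4, 0.9, 0.99, 1.0]

for p, s in zip(illustrative_probs, surprisal_bits(illustrative_probs)):
    print(f"p = {p:.2f}  surprisal = {s:.3f} bits")
```

As the probability of each next word approaches 1, surprisal approaches 0 bits, which is the flatlining on memorized text described above.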

How do you calculate surprisal for existing real-world texts? Current LLMs often recognize them after a few words, and then surprisal flatlines at zero.

#llm #surprisal #psycholinguistics

Teaching evaluation for my intro stats course this summer: Close to perfect scores in all categories 😊

After 20+ years of laptops as my main computer, I'm back to a workstation. Really enjoying those 20 CPU cores :)

I'd like to sync my home directory on two computers (laptop for travel, desktop at the office). Is unison still the best solution for that? Anything that I need to pay particular attention to? Any pitfalls that I need to watch out for? Last time I used unison was 10+ years ago.

#ubuntu #linux

My bank is replacing their so far excellent customer hotline with a chat bot. The result is exactly as infuriatingly bad as you might think. Complete disaster.

Fully-funded PhD position in experimental and/or computational psycholinguistics:

https://tmalsburg.github.io/job_ad_2025_phd.html

Application deadline is August 15.

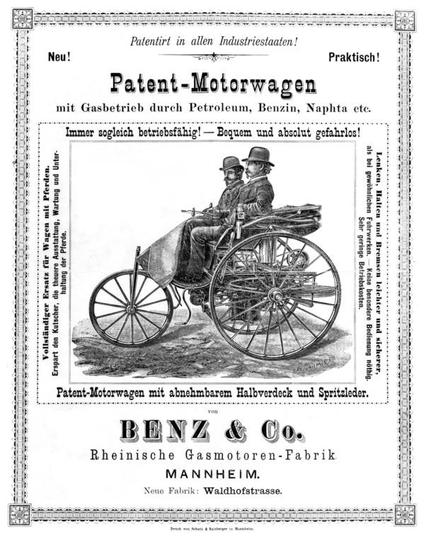

The internal combustion engine is basically a metal box with fire in it. It's almost ridiculously easy to see the many downsides of this technology. Nonetheless, it has become one of the biggest success stories in tech. The reason is an endless stream of small optimizations and clever workarounds. The story of LLM-based AI will be similar. LLMs are a technology with many obvious flaws, but over time we will find workarounds and optimizations that make them useful and reliable.

AI: Grok apparently aligns its answers with what Elon Musk has said

The release of the new AI model from Elon Musk's xAI did not go off without difficulties. Now there are questions about how closely it was tailored to him.