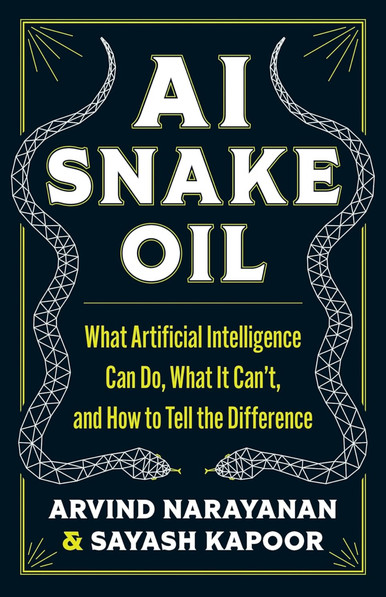

I'm ecstatic to share that preorders are now open for the AI Snake Oil book! The book will be released on September 24, 2024.

@randomwalker and I have been working on this for the past two years, and we can't wait to share it with the world.

Preorder: https://princeton.press/gpl5al2h