| job at | https://www.huygens.knaw.nl/en/ |

| phd at | https://linkedsources.org/ |

| [paused] trombone at | https://www.catchingcultures.com/ |

marnixvb 🌍🌎🌏

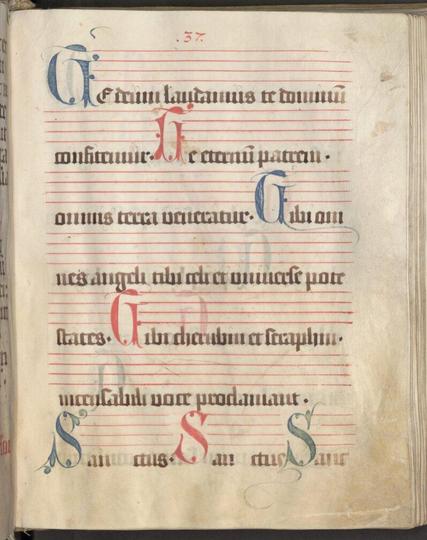

The person doing the music notation had a day off, apparently 🤔

( Vesperale - SuStB Augsburg 2 Cod 511 - https://mdz-nbn-resolving.de/urn:nbn:de:bvb:12-bsb00135266-3 )

In Paris it's 12:00 pm

In Toronto it's 6:00 am

In Washington it's 1930s Germany.

I've identified a way of scaling up human-level general intelligence for much less than the hundreds of billions of dollars OpenAI claim to need to spend.

It's called nursery education and free school meals. It takes about 18 years to take full effect, but if you start now it'll overtake LLMs in usefulness within 1 year.

"LLM did something bad, then I asked it to clarify/explain itself" is not critical analysis but just an illustration of magical thinking.

Those systems generate tokens. That is all. They don't "know" or "understand" anything, and they can't "explain" anything. There is no cognitive system at work that could respond meaningfully.

That's the same dumb shit as what was found in Apple Intelligence's system prompt: "Do not hallucinate" does nothing. All the tokens you give it as input just shift which part of the word space stored in the network it draws from. "Explain your work" just leads the network to lean towards training data that has those kinds of phrases in it (like tests and solutions). It points the system at a different part, but the system does not understand the command. It can't.