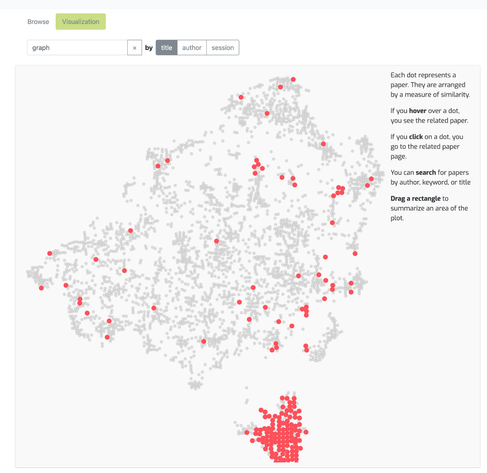

My student Rohit Gandikota is finding that large diffusion models like SDXL can be controlled very precisely with low-rank changes in parameters.
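The post describes a "low-rank change in parameters" without showing what one looks like. The sketch below is a minimal illustration, assuming a generic LoRA-style update: a frozen weight matrix `W` plus a scaled product of two thin matrices, `W + scale * (up @ down)`. It is not the Concept Sliders code, and it does not show how the low-rank factors are trained. The names `apply_slider`, `up`, `down`, and `scale` are hypothetical. It uses plain Python lists so that it runs without extra libraries.

```python
# Minimal sketch of a low-rank ("LoRA-style") weight edit whose strength is set
# by a scalar slider value. All names are hypothetical illustrations, not code
# from the Concept Sliders project.

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def apply_slider(w, down, up, scale):
    """Return W + scale * (up @ down).

    w:     d_out x d_in base weight matrix (left unmodified)
    up:    d_out x r matrix
    down:  r x d_in matrix
    scale: slider value; 0 leaves W unchanged, a negative value reverses the edit
    """
    delta = matmul(up, down)  # product has rank at most r, shape d_out x d_in
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


if __name__ == "__main__":
    w = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    up = [[1.0], [2.0], [0.0]]   # 3 x 1, so the edit has rank 1
    down = [[0.5, 0.0, 1.0]]     # 1 x 3
    for s in (-1.0, 0.0, 2.0):
        print(s, apply_slider(w, down, up, s))
```

The design point is the parameter count. For a `d_out x d_in` layer, the two factors together hold only `r * (d_out + d_in)` numbers, compared with `d_out * d_in` for a full update. The single `scale` value then acts as a continuous knob that moves the weights along one fixed direction.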

His Twitter thread on "Concept Sliders" also doubles as a great survey of current work on controlling diffusion models. The nice thing about modern image synthesis is that the results are gorgeous.

Help him spread the word!

https://x.com/RohitGandikota/status/1727410973638852957?s=20

Rohit Gandikota (@RohitGandikota) on X:

> Have you ever wanted to make a precise change when generating images with diffusion models?🎨 We present Concept Sliders, which enable smooth control to create your vision, fix common problems, and a "fix hands" sliders too. Here's an explainer on how sliders work🧵