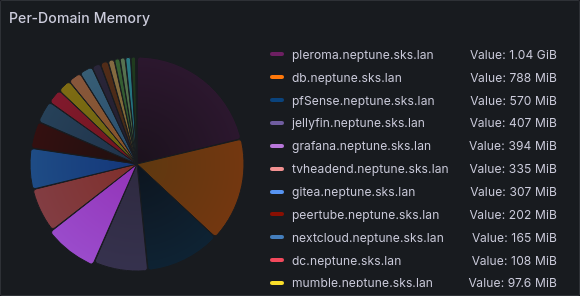

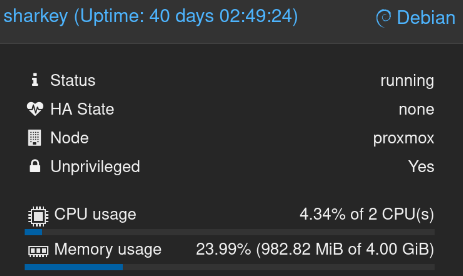

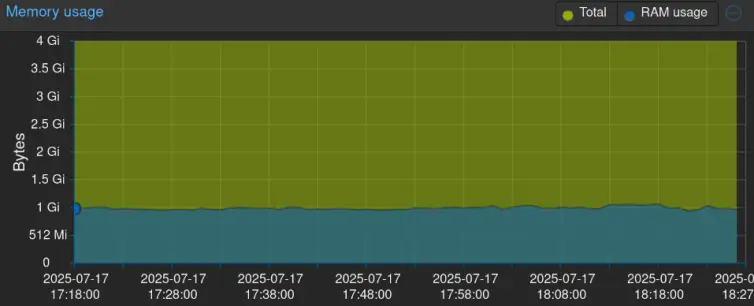

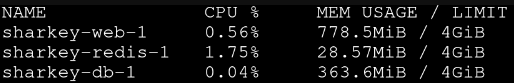

How much RAM is your fedi software currently using, whether it is #Mastodon, #Akkoma, #GoToSocial or one of the fifty shades of #Misskey? (And by RAM I mean "in total": not just the software's main process, but also the database and any additional stuff.)

I'm asking purely out of curiosity. For instance, soc(dot)breadcat(dot)run currently uses approx. 4.5 GB (4 GB for Akkoma's main processes and ~500 MB for the DB, on Docker).

Repost appreciated :nice-three-hearts: