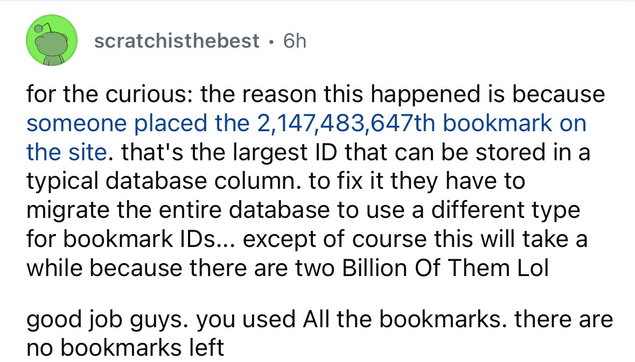

@catgirlQueer @vashti As I myself wrote, years ago,

"At nanosecond resolution (if I've done my arithmetic right), 128 bits will represent a span of 1 × 10²² years, or much longer than from the big bang to the estimated date of fuel exhaustion of all stars. So I think I'll arbitrarily set an epoch 14Bn years before the UNIX epoch and go with that. The time will be unsigned - there is no time before the big bang."

So, yes, if you're content with nanosecond resolution...

https://github.com/simon-brooke/post-scarcity/wiki/cons-space#time
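The arithmetic in that quote is easy to check. Here is a minimal Python sketch. It assumes a Julian year of 365.25 days and counts all 2**128 distinct nanosecond ticks. The names are illustrative and not taken from the linked wiki.

```python
# Sanity check: how long a span can an unsigned counter of nanoseconds cover?
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60   # Julian year (an assumption)
NS_PER_SECOND = 10**9

def span_in_years(bits: int, ticks_per_second: int) -> float:
    """Years representable by an unsigned counter `bits` wide (hypothetical helper)."""
    ticks = 2**bits                        # number of distinct values
    return ticks / ticks_per_second / SECONDS_PER_YEAR

years = span_in_years(128, NS_PER_SECOND)
print(f"{years:.3e} years")                # prints: 1.078e+22 years
```

For contrast, `span_in_years(64, NS_PER_SECOND)` gives only about 584 years, which is why 64 bits of nanoseconds is not enough for this purpose.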

@simon_brooke @catgirlQueer @vashti

https://en.wikipedia.org/wiki/Network_Time_Protocol

NTPv4 introduces a 128-bit date format: [...] According to Mills, "The 64-bit value for the fraction is enough to resolve the amount of time it takes a photon to pass an electron at the speed of light. The 64-bit second value is enough to provide unambiguous time representation until the universe goes dim."
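Here is a sketch of the 64/64 split Mills describes. It treats the 128-bit value as plain unsigned 64.64 fixed point and ignores any further subdivision of the seconds field that the NTPv4 specification may define. The helpers `split_64_64` and `to_seconds` are hypothetical names, not from any NTP library.

```python
from fractions import Fraction

SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60   # Julian year (an assumption)

def split_64_64(value: int) -> tuple[int, int]:
    """Split a 128-bit unsigned integer into (seconds, fraction) halves."""
    if not 0 <= value < 2**128:
        raise ValueError("value must fit in 128 unsigned bits")
    return value >> 64, value & (2**64 - 1)

def to_seconds(value: int) -> Fraction:
    """Exact number of seconds represented by a 64.64 fixed-point value."""
    seconds, fraction = split_64_64(value)
    return seconds + Fraction(fraction, 2**64)

resolution = 2.0**-64                      # smallest step of the fraction, in seconds
max_span_years = 2**64 / SECONDS_PER_YEAR  # range of the 64-bit seconds field

print(f"resolution: {resolution:.3e} s")   # prints: resolution: 5.421e-20 s
print(f"span: {max_span_years:.3e} years") # prints: span: 5.845e+11 years
```

For example, `to_seconds((5 << 64) | (1 << 63))` is exactly 5.5 seconds, because the top bit of the fraction half is worth one half of a second.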

The only larger thing I know of (aside from BIGNUM) is Babbage's design for the Analytic Engine, for which he required 50 decimal digits. That's about 166 bits. It is an absolutely insane amount of precision at any time, let alone in 1837, when the grand total of computers in the world was MINUS 100 YEARS. Daft bugger.
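The digits-to-bits conversion works out as follows. This is a minimal Python sketch. It assumes an unsigned binary register and leaves aside how Babbage actually stored each digit.

```python
import math

DIGITS = 50

# Information content of a 50-digit decimal number, in bits: 50 * log2(10).
exact_log2 = DIGITS * math.log2(10)

# Width an unsigned binary register needs to hold every value 0 .. 10**50 - 1.
bits_needed = (10**DIGITS - 1).bit_length()

print(f"{exact_log2:.1f} bits of information, {bits_needed}-bit register")
# prints: 166.1 bits of information, 167-bit register
```

The gap between 166.1 and 167 is just rounding up, since a register can't have a tenth of a bit.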