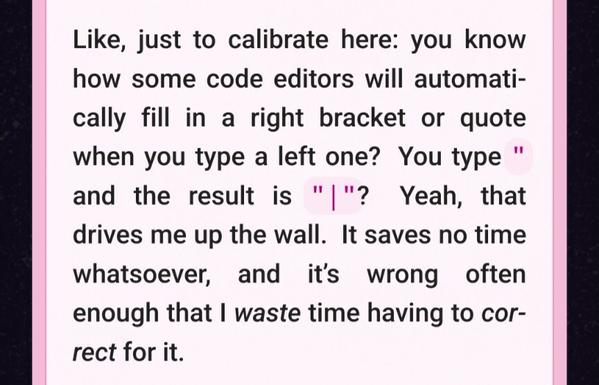

@eevee :0 you don't like closing tag autocomplete?

Like <div>|</div>

Or func(|)

Or var strings =[]{"|"}

@risottobias

@eevee Recently, I managed to configure VS Code to show suggestions (function names and arguments only, no Copilot bullshit) but never complete them unless I explicitly ask it to. Before I managed that, I had to disable the whole feature.

I didn’t want suggestions to save on typing. Programming should be 90% thinking, 10% typing, (and 40% drinking coffee if that’s your thing.) I use them to save on remembering and looking up function signatures. Don’t need an LLM for that.

@risottobias

@eevee it would be nicer if it detected an unbalanced set of divs. some editors, when you type ), will recognize that they autocompleted one for you and skip over it instead; some are definitely more primitive and just go )) instead :/

definitely something in an editor to tune exactly how you want,

e.g., autoclose brackets, braces, tags, and under what circumstances (inside a list or class, etc.)

auto-bracket lisp :O

@risottobias when i've encountered this feature it usually understands when i type the closing ), but if i go BACK to edit code later — which happens, like, a lot — then it gets hopelessly confused because it just has no way of knowing what i'm trying to do

and </div> is more than one character, so it doesn't understand when i try to type that myself
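For anyone curious why the feature behaves like this, here is a toy model of auto-close with "type-over" (the editor steps past a closer it inserted itself instead of doubling it). It's a hypothetical sketch, not any real editor's code: the `Buffer` class and its methods are made up for illustration, and real editors layer on more heuristics. It reproduces both cases from above: typing `)` right after `func(` works, but going back later to wrap `foo(x)` in `bar(...)` leaves a stray `()`.

```python
# Toy model of editor auto-close + type-over (hypothetical, not any real editor's code).
PAIRS = {"(": ")", "[": "]", "{": "}", '"': '"'}


class Buffer:
    def __init__(self) -> None:
        self.text = ""
        self.cursor = 0
        # Positions of closing characters the editor inserted on its own.
        self.auto_closers: set[int] = set()

    def _insert_at(self, pos: int, s: str) -> None:
        """Insert s at pos, shifting any remembered auto-closers that sit after it."""
        self.text = self.text[:pos] + s + self.text[pos:]
        self.auto_closers = {p + len(s) if p >= pos else p for p in self.auto_closers}

    def move_to(self, pos: int) -> None:
        """Simulate the user clicking or arrowing somewhere else."""
        self.cursor = pos

    def type_char(self, ch: str) -> None:
        # Type-over: typing the closer the editor itself inserted just steps past it.
        if self.cursor in self.auto_closers and self.text[self.cursor:self.cursor + 1] == ch:
            self.auto_closers.discard(self.cursor)
            self.cursor += 1
            return
        self._insert_at(self.cursor, ch)
        self.cursor += 1
        if ch in PAIRS:
            # Auto-close: put the matching closer right after the cursor and remember it.
            self._insert_at(self.cursor, PAIRS[ch])
            self.auto_closers.add(self.cursor)

    def type_text(self, s: str) -> None:
        for ch in s:
            self.type_char(ch)


if __name__ == "__main__":
    b = Buffer()
    b.type_text("func(")
    print(b.text)  # func()
    b.type_text(")")
    print(b.text)  # func()   (typed over, not duplicated)

    # Going BACK to edit: wrap an existing call in bar(...)
    b2 = Buffer()
    b2.type_text("foo(x)")
    b2.move_to(0)
    b2.type_text("bar(")
    b2.move_to(len(b2.text))
    b2.type_text(")")
    print(b2.text)  # bar()foo(x))
```

The `)` typed at the end of the line isn't one the editor remembers inserting, so it gets added, while the closer it did insert sits orphaned right after `bar(`. That's the "no way of knowing what i'm trying to do" problem in miniature.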

@eevee @risottobias I usually have it on but it's definitely a borderline one as to if it helps or hinders in a given moment

But, crucially, 1) it's based on a largely predictable algorithm, so it's rarely *surprising* and I know when I'm going to have to dance around pressing *more* keys to correct it, and 2) it's nobody's pet Whatever to pump, so I can just...turn it off, granularly from other IDE smarts, and won't even get a "turn it back on? [yes!] [remind me later]" dialogue tomorrow.

@eevee @risottobias I'm not a programmer. I barely even write code, or script, or whatever. But when I do... That shit drives me up the wall. Glad to finally see someone else talking about it. 😅

I'm about 2/3 of the way through reading, btw, and it's fantastic.

@risottobias @eevee yeah same i dislike it, i think it falls in the same category as autocorrect for me

yes maybe if you build the habit it can be faster most of the time… but “fast” is just the one metric that's easy to collect, standing in for something more important: “unfrustrating”. like a drunk looking for their keys under the lamppost because that's where it's easier to look, the developer optimises the number of keystrokes it takes to complete some specific scenario (instead of doing lengthy qualitative research into how people actually use it).

sure auto-close and auto-correct and the like are faster for some specific tasks, and if you get used to it you tend to write so as to stay within these specific tasks or you just accept the frustrating cases that keep coming up because you've built up the muscle memory for the fast cases and at this point it's more frustrating to not use this feature. but often it's worth trying a new tool even though you need to climb that learning curve.

too many typos bc of the small keys on your phone? maybe give alternative keyboards like 8vim and flickboard a go.

@eevee

> I feel like I get a little dumber every time I accidentally start reading it [LLM output]

so much this!!

@eevee

> [The attitude about LLMs] feels like the same attitude that happened with Bitcoin, the same smug nose-wrinkling contempt. Bitcoin is the future. It’ll replace the dollar by 2020. You’re gonna be left behind.

> What do programmers get out of this?

I think you've struck something deeper, not tied to vested interest.

Isn't this similar to the smug "I use Arch btw" ?

I think I've seen this much earlier, in non-IT context, but I can't recall an example...

religion? 🤔

@eevee

Also you wrote that the LLM hype is rooted in the idea that "doing things is worthless".

To me that sounds awfully like burnout / depression / learned helplessness.

So maybe that's where this comes from? Maybe people have been doing tedious work without seeing any purpose or meaning in it, they got burned out, and now they want a machine to do that job for them...

> What are you all even writing that so much of it consists of generic slop?

IMO many jobs are like that :(

Also, pressure to get the thing to work no matter what is such a killjoy.

It can be external, but it can also be internal - when you know a lot of people rely on you, they don't need to tell you, you just know it.

It makes it seem like the result is all that matters, and then the process itself is no longer enjoyable.

@eevee Your post is 🔥!!!

I'm still holding out hope that the bubble will burst sooo hard before my kids get into school. My head is close to falling off from all the head shaking whenever I read about the shit that's going on in schools and universities with LLMs.

I hate the "whatever machine" with the passion of a thousand burning suns. 😬

@eevee I had the strangest experience entering a comment on your post, using firefox for android on a pixel 2, using a really old version of (now Microsoft) Swiftkey (I'm afraid to update anything b/c of business-driven regressions like llm feature tumors)

entering text at the front reversed it character by character & reset the cursor to before the text, unless I typed too fast (I did the first line very slowly w/ many do-overs). entering text elsewhere or adding a newline caused strange duplications.

@eevee "I mean, I get it. I was trying to do something that had never been done before. LLMs are fine at things that appear a zillion times in their training data — in fact, this is probably a big part of the trick, because the things that appear more often in their training data are the things people are more likely to ask about in general and thus the things people are more likely to ask an LLM."

I've tried to explain this sometimes and people don't seem to get why this makes it useless 😑

@eevee the devaluing of creation really is the crux of it, because that also stops adoption of these things for whatever stuff there actually is they could be useful for by saying "they can do everything for you". we'll likely not see actual uses for these things until the entire current cycle dies down, and at that point they will likely be so constrained that they're not recognisable as 'ai' tools.

like, i sometimes do single-pass image-to-image runs on digital paste-up artworks i try to do, so i have something to paint over without having to repeatedly hunt for the right colour combinations for shadows and such, which i'm really bad at. in that case it acts like a photoshop filter. that's where i see this entire thing going once the hype dies.

@eevee more like one-and-a-half; since the process basically "de-blurs" an image from noise, i can add random noise to my own image and tell the model "okay we're on step 15 of 20, deblur the rest".

the fact that it's basically impossible to avoid plagiarism is why i only do these for myself, as experiments. i don't want to put more slop on the web.

@eevee but yes, it does still go through CLIP and the feature database, and still requires a prompt. it just skips most of the actual generation.
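The "add noise and say we're on step 15 of 20" trick is what most diffusion front-ends expose as img2img "strength" (denoising strength). A minimal sketch, assuming a DDPM-style forward process x_t = sqrt(ᾱ)·x_0 + sqrt(1 − ᾱ)·ε and start-step arithmetic modelled on Hugging Face diffusers' img2img pipeline. The function names, the pixel values and the `alpha_bar` number are all hypothetical, and Stable Diffusion actually does this in latent space rather than on raw pixels.

```python
import math
import random


def img2img_start_step(num_steps: int, strength: float) -> int:
    """Index of the first denoising step that actually runs (diffusers-style arithmetic, assumed)."""
    init_steps = min(int(num_steps * strength), num_steps)
    return max(num_steps - init_steps, 0)


def noise_image(x0: list[float], alpha_bar: float, rng: random.Random) -> list[float]:
    """Forward-diffuse a clean image: x_t = sqrt(alpha_bar)*x0 + sqrt(1 - alpha_bar)*eps."""
    signal = math.sqrt(alpha_bar)
    noise = math.sqrt(1.0 - alpha_bar)
    return [signal * x + noise * rng.gauss(0.0, 1.0) for x in x0]


if __name__ == "__main__":
    # strength 0.25 with 20 steps: skip the first 15, run only the last 5
    print(img2img_start_step(20, 0.25))  # 15
    rng = random.Random(0)
    my_painting = [0.2, 0.5, 0.8]  # hypothetical flattened pixel values
    # alpha_bar is a made-up stand-in for the schedule's value at step 15
    x_t = noise_image(my_painting, alpha_bar=0.9, rng=rng)
    # x_t would then be handed to the model as if it were step 15's input
    print(len(x_t))  # 3
```

Low strength means a high ᾱ (little noise added) and few remaining steps, which is why the output stays close to the painted-over original, much like a filter.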

sometimes it can be fun to just give the model shapes and get it to find things in them, cloud gazing style, like the google thing that saw dogs everywhere.

@eevee it's Guided Whatever. so it's basically the same thing as all the chatbots everyone is getting, but for images.

tangent:

other than doing art experiments just for me, i'm currently studying llms at work so i can stay employable, and going through the code of these systems is depressing. like, i don't have the math brain to actually decipher the vector calculus at the base of the system, but i can churn through all the structure of the system, and... there is none. no organisation, no security consideration, no nothing. it's all research code that escaped.

in no other production-ready system have i encountered a function named essentially "load_local_file" which, when called with a string argument representing the path of a local file, makes an http call to a proprietary service with an api key embedded in the library, which basically only _looks at the file name_ and says "ok here is 5 GB of extra files for that one file".

deploying these systems in secure contexts (which i know people do) must be a nightmare.

The fact that you have no idea what will come out the other side actually seems to be part of the appeal; as @davidgerard recently wrote, it's like playing a slot machine: https://pivot-to-ai.com/2025/06/05/generative-ai-runs-on-gambling-addiction-just-one-more-prompt-bro/

@lime

Bullshit makes

the flowers grow

& that's beautiful.

AI slop can't do that.

This. I think the tooling is trying to "shift left" too far (into my head, perhaps?). I like the integration of language servers and linters into Vim; they don't run until I drop out of insert mode, or at least until I hit <CR>.

I find the trend to cut out the process and just produce a Whatever so weird. I think the process of creating something is just as interesting as the end product, if not more.

(ST "that said I did have to write this post without either of those" `)))

(v0160 (EXT (ST "(for context, watch the old SICP lectures where they spend way too much time closing brackets)" )))

🍵

🍵