https://futurism.com/commitment-jail-chatgpt-psychosis

Wandering into my feed to say, “Oh, but *I* use it and *I’m* okay!” is not going to get you anything but a big ol' block.

Chrissakes, people. Respect yourselves more.

I was raised to think that we’re all a bit insane, and that the dangerous ones are those who think they’re perfectly okay.

This is quite disturbing. I use it myself, but mostly as a ‘private secretary’. I tell it the elements of my project and what I want to do with it, and then the AI ‘fetches’, turning out organized information from my cues. I do it only to amuse myself…no delusions…yet!

@lilithsaintcrow "As the hype around AI has risen to a fever pitch, many people have started using ChatGPT or another chatbot as a therapist, *often after they were unable to afford a human one.*"

I think I found the root cause of the issue. (Emphasis mine)

Even if you can afford a therapist, they often might not be available when you need them.

I know around here it might be months before you can see someone for the first time.

Whereas the AI is there all the time, consistently, with no cost and no hoops to jump through, seemingly anonymous and with no stigma attached.

Cost would be a big issue, but so would other factors.

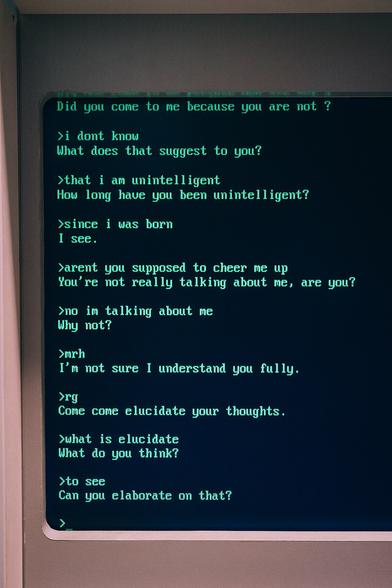

Agreed. ChatGPT is like ELIZA on steroids, which apparently includes the ELIZA effect.

@GalbinusCaeli @nyrath @lilithsaintcrow

> with booster rockets and cocaine

Peanut brain:

"they are talking about Elon Musk"

Normal brain (after reading back up the thread):

"no it's about AI and the ELIZA effect fulfilling personal delusions at great risk to themselves and the people around them"

Galaxy brain:

"they ARE talking about Elon Musk"

And I only use them for silly things, not for anything actually useful.

@lilithsaintcrow I knew chat bots were bad, but this is a whole other level.

I'm bipolar and have experienced hypomania, aka mild mania. I know that feeling of grandiosity and how special you feel, but not at those levels. If it's anything like that, I understand the allure.

Take it from me, don't let your loved ones use chat bots. They're just another form of designer drug.