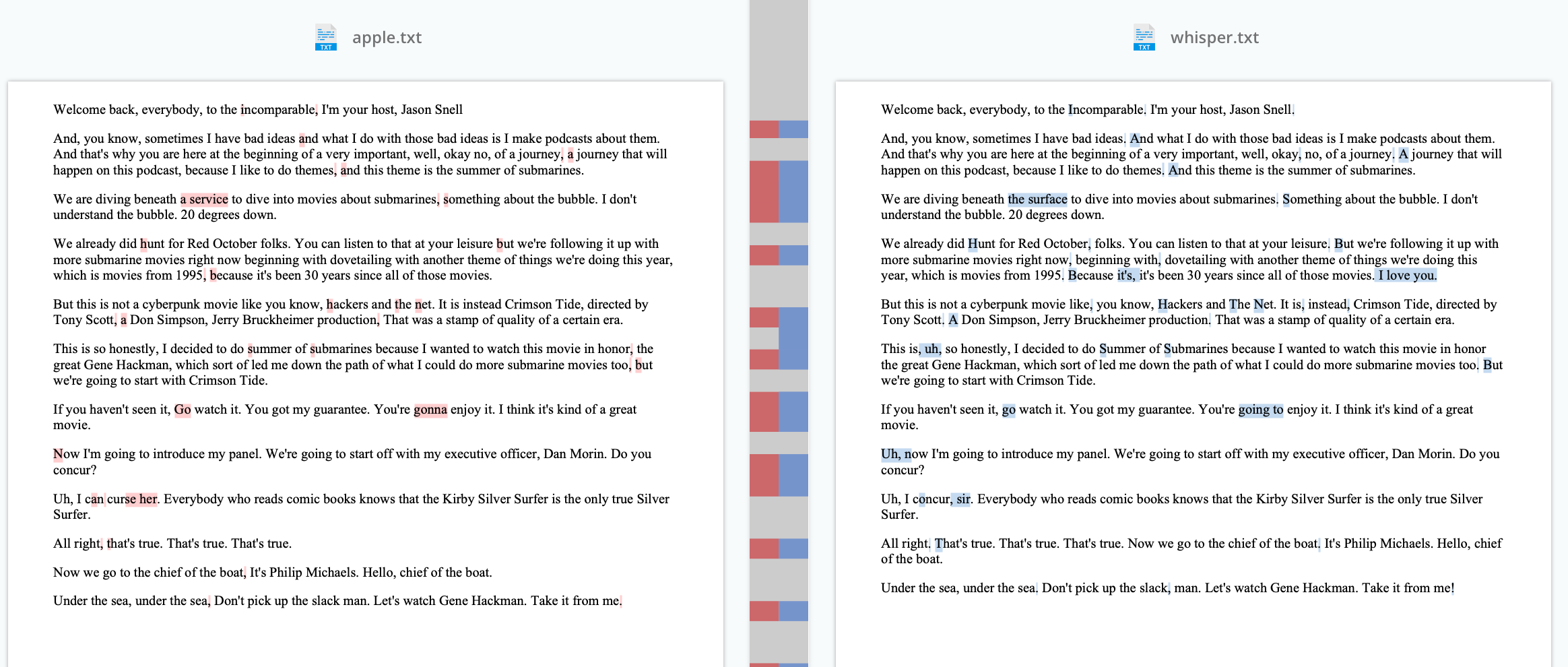

Whisper's large-v3-turbo is definitely quite a bit slower than Apple's new onboard transcription model in 26, though it's also noticeably better, especially at punctuation and capitalization. Comparison image attached - v3-turbo used context to get some key phrases including "beneath the surface" and "I concur, sir" Also Whisper caught some crosstalk, albeit incorrectly ("I love you"). Interesting!

viewable comparison here: https://draftable.com/compare/AbkISTFXdBKF