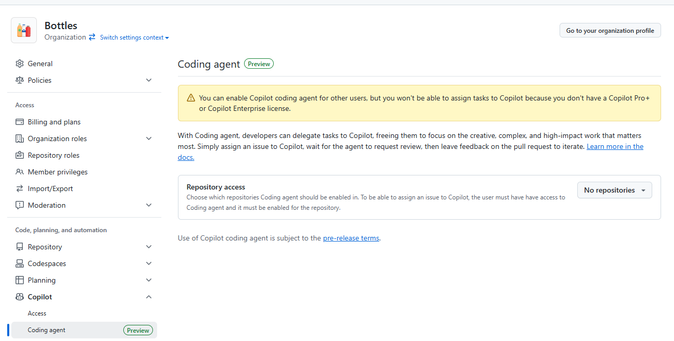

Given GitHub's hostile push for AI, I desperately want to move Bottles's code to GNOME GitLab and keep the Codeberg mirror up.

I'm legit so fucking tired of it. It's hard for me to develop Bottles when the Copilot spam keeps demotivating me. Their hostile push has gotten like Discord, where everything is Nitro COPILOT THIS Nitro COPILOT THAT. I'm stuck here playing the opposite of Where's Wally: as in "try not to find any mentions of Copilot".

It's been bothering me so much that it has become more and more difficult to contribute to projects hosted on GitHub. I also get uncomfortable when I contribute to software mirrored to GitHub, which includes GNOME apps.