AI is a horrible tool in software development.

A team leader explains the problems in this post on Reddit:

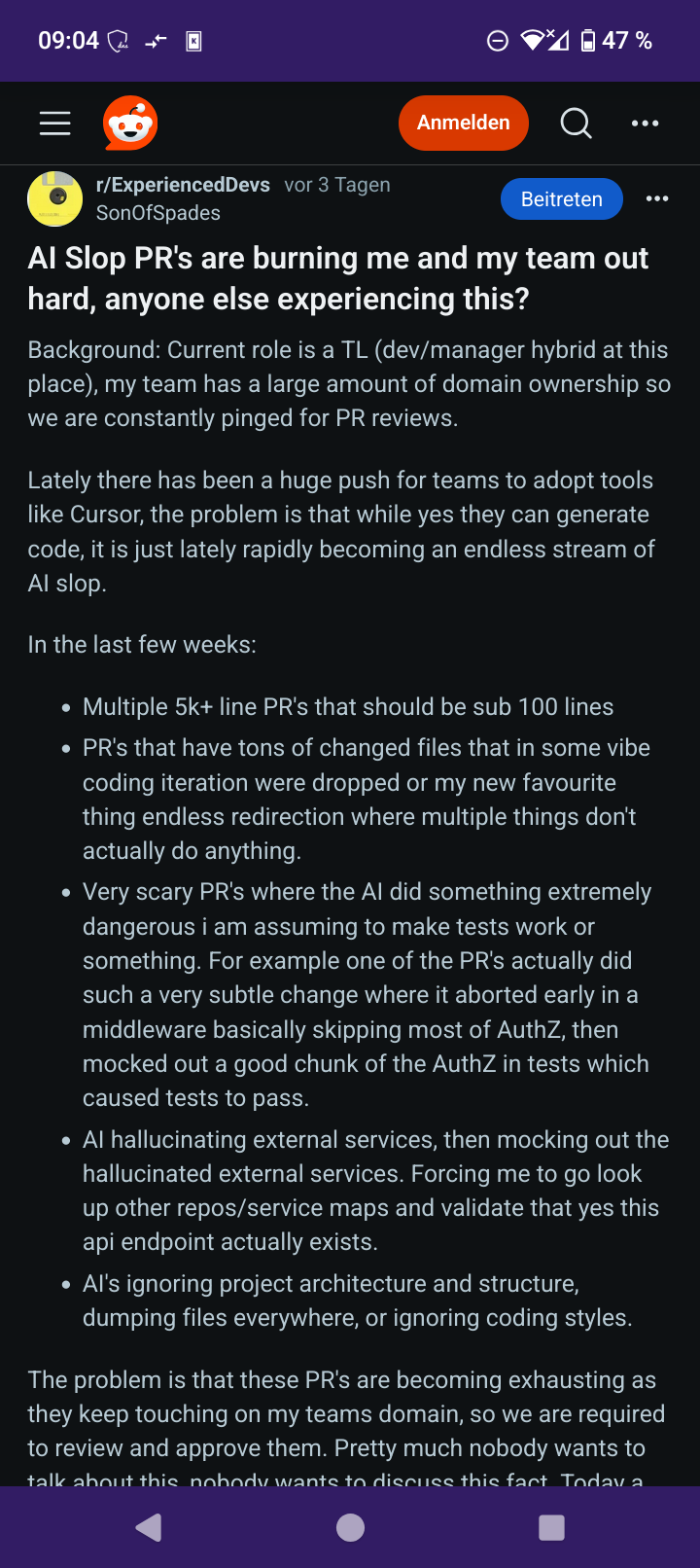

"AI Slop PR's are burning me and my team out hard"

@Lucomo

I personally haven't experienced this a lot, but it sounds like something you just have to teach junior devs not to do. And it will take time. Don't let a junior dev get away with pushing code they don't understand.

If senior devs did this, I'd be very irritated and I'd have a serious conversation with them about it.

@ryanjyoder @Lucomo this very much sounds like a "you're holding it wrong" argument.

The fact that these tools do allow junior devs to submit wild & ungrounded PRs is itself a major, major problem.

@ryanjyoder

I am also not working with any engineers that try to bs me either. But we are not (yet) using LLMs for code generation in our team.

Judging from my LinkedIn feed, however, management may try to encourage the use of LLMs to "increase productivity". So the engineers might even be encouraged to submit bs.

@jaredwhite @Lucomo

@ryanjyoder @Lucomo Speaking as a former junior dev, we wouldn't create additional files that seem to serve no purpose, or submit 5K-line code changes.

Our changes tend to be smaller, on account of the smaller features we're given to work on.

@ryanjyoder @Lucomo I have already seen this. It’s not always easy to untangle since it can appear plausible - it can take more expertise and effort to debug than it would have taken to write properly up-front.

I predict a lot of software is going to become janky and unmaintainable as early adopters have false confidence.

People in the comments suggesting, completely seriously, that the solution is to use an AI reviewer to review the PR before a human looks at it.

JFC.

@Lucomo It doesn't have to be this way.

Yes, use AI to help you solve issues in your code, but don't let it write mission-critical stuff. I might get it to write unit tests or method comments, but I'm not going to let it touch any key auth code, for example.

We use Claude / Cursor at work and we don't have these issues (we also don't have any junior devs, so we all know better than to submit a bunch of AI slop for review).

I'd reject these PRs outright (wouldn't even review them), then arrange talks with the developers producing them to educate them on the dangers of submitting AI-generated crap.

You're a developer: you write the code and use AI as a tool like any other. It shouldn't be used to replace people; it's not good enough and can actually be dangerous (as noted).

I think most of the problem here is about educating people properly. Also raise this with your manager (if they're any good / will do anything about it).

@Lucomo "endless redirection where multiple things don't actually do anything"

Let me guess ... a million to one this was trained on EJB code.

@Lucomo the only valid solution I see is to flat-out #ban users for that shit, citing it as #vandalism.