GitHub - ContainerSolutions/tinkerbell-rpi4-workflow: Instructions and configuration files to create tinkerbell workflow for raspberry pi 4

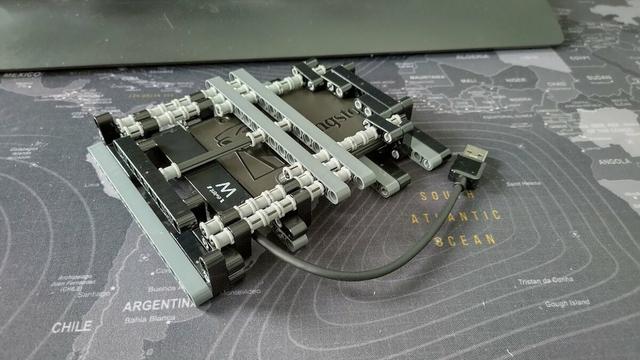

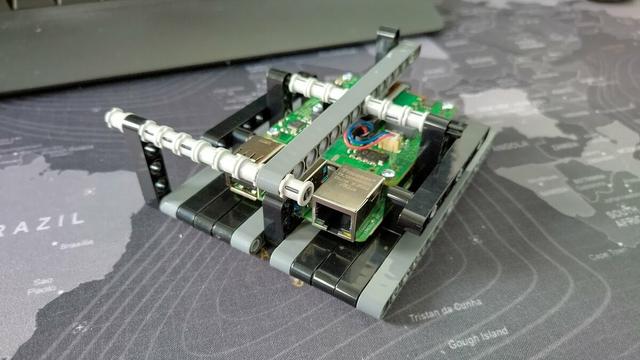

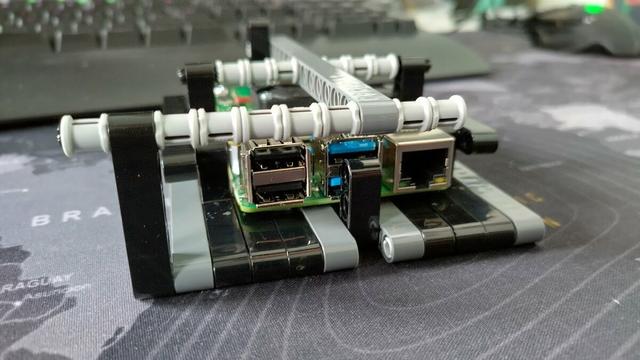

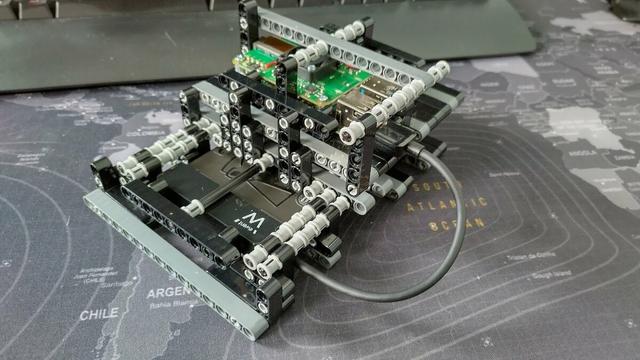

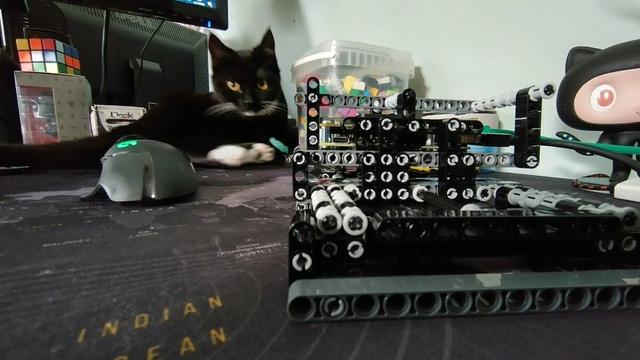

#tinkerbell_oss Got something else to fix for booting from SSD, but will also fix that later.

For now, the next step is getting GitHub Actions runners on it, so I can start building applications for it and have a way to deploy directly to it. There are several solutions for that, should be fun :D

If anything, I learned that arm and arm64 support in many Helm charts/Docker images out there isn't as good as I hoped for.

This is partly why I'm doing this project, aside from having some use cases in the house.

Essentially, lots of Docker images only have an amd64 version, maybe an arm64 one, but rarely an arm (v7) image, so running anything on the RPi 3s in the cluster is unlikely unless I start building images for them.

Now the GitHub Actions Runner Helm chart I'm using also only has amd64 and arm64 versions.
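A quick way to see which architectures an image actually ships is to look at its manifest list, which is the JSON that `docker manifest inspect <image>` prints for a multi-arch image. Below is a minimal sketch in Python, assuming that JSON has already been fetched. The sample document, the helper names `platforms` and `missing_platforms`, and the wanted platform strings are all made up for illustration, and real images don't always set `variant`, so exact string matching like this is a simplification.

```python
import json

# Hypothetical, trimmed-down manifest list for illustration only.
# A real one also carries digests, sizes and media types per entry.
SAMPLE_MANIFEST_LIST = """
{
  "schemaVersion": 2,
  "manifests": [
    {"platform": {"architecture": "amd64", "os": "linux"}},
    {"platform": {"architecture": "arm64", "os": "linux", "variant": "v8"}}
  ]
}
"""


def platforms(manifest_list_json):
    """Return the set of 'os/arch[/variant]' strings found in a manifest list."""
    doc = json.loads(manifest_list_json)
    found = set()
    for entry in doc.get("manifests", []):
        platform = entry.get("platform", {})
        name = "{}/{}".format(
            platform.get("os", "unknown"),
            platform.get("architecture", "unknown"),
        )
        # The variant (e.g. v7, v8) is optional, so only append it when present.
        if platform.get("variant"):
            name += "/" + platform["variant"]
        found.add(name)
    return found


def missing_platforms(manifest_list_json, wanted):
    """Return the wanted platforms the image does not provide, sorted."""
    return sorted(set(wanted) - platforms(manifest_list_json))


if __name__ == "__main__":
    # Assumption: the RPi 4 nodes want linux/arm64/v8, the RPi 3 nodes linux/arm/v7.
    wanted = ["linux/arm64/v8", "linux/arm/v7"]
    print(missing_platforms(SAMPLE_MANIFEST_LIST, wanted))
```

Anything that comes back from `missing_platforms` is an image I'd have to build myself before it can run on those nodes.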

So that is going to be fun. The cool thing is that setting that up is really, really easy, like scary easy. I'm writing a Helm chart to add all of the runner deployment and autoscaling definitions for that. I'm also considering putting them directly in a project's deployment. But that results in a chicken-and-egg problem, so either the first deployment to the cluster has to be done manually or I'll have to store them at a central location.

However, the first thing on the menu is getting Helm to work and being able to deploy from within the cluster using a GitHub Actions Runner.

When that works, I'm locking down networking and permissions within the cluster and on the network as much as possible.

How to correctly fix init.resizefs when booting rpi from ssd · Issue #27 · sgielen/picl-k3os-image-generator

When generating the image for raspberry pi and burning it to a ssd, so that the rpi boots from the usb disk instead of the sd card everything it needs to be changed is this line ('p1' to ...