https://www.tfeb.org/fragments/2025/11/18/the-lost-cause-of-the-lisp-machines/ #LispMachines #RetroTech #TechFossils #ComputingHistory #90sNostalgia #HackerNews #ngated

make love (i.e. make the file love), it would respond with not war? before proceeding as normal. Someone needs to put this into the make build utility. #programming #ComputerHistory #ComputingHistory

People used to bond multiple 56k modems together to squeeze more speed out of dial-up. In theory each modem adds another 56 kbit/s, so the total is N × 56 kbit/s, with no real protocol limit. In practice it quickly gets messy. You need one phone line per modem, your provider must support multilink PPP, and overhead plus line noise cut the speed down. Most setups used only two to eight modems before cost and complexity made it pointless. At the extremes you could run hundreds or even thousands of lines, but power, routing, and line quality would collapse performance. To reach one terabit per second you would need about eighteen million modems drawing roughly one hundred megawatts of power and filling a warehouse with copper. Possible on paper, completely absurd in reality.
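The back-of-the-envelope numbers can be checked with a few lines of Python. This is only a rough sketch: the 5.6 W per-modem power draw is an assumption picked to match the "roughly one hundred megawatts" figure, not a measured value, and the function names are made up for illustration.

```python
# Rough sanity check of the modem-bonding arithmetic.
MODEM_BPS = 56_000            # nominal 56k line rate, in bits per second
TARGET_BPS = 10**12           # one terabit per second
WATTS_PER_MODEM = 5.6         # ASSUMPTION: hypothetical per-modem power draw


def modems_needed(target_bps: int, per_modem_bps: int = MODEM_BPS) -> int:
    """Smallest number of ideal, loss-free modems reaching target_bps."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-target_bps // per_modem_bps)


def total_power_megawatts(n_modems: int, watts_each: float = WATTS_PER_MODEM) -> float:
    """Total power draw in megawatts for n_modems identical modems."""
    return n_modems * watts_each / 1e6


n = modems_needed(TARGET_BPS)
print(f"modems needed: {n:,}")
print(f"power draw:    {total_power_megawatts(n):.1f} MW")
```

This ignores multilink PPP overhead and line noise entirely, so the real count would be higher still.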

PhD position on the environmental impacts of AI at KTH Environmental Humanities Laboratory as part of a project funded by the research program Wallenberg AI, Autonomous Systems, and Software Program – Humanity and Society (WASP-HS).

The doctoral student will be part of the project “AI Planetary Futures: Climate and environment in Silicon Valley's AI paradigm”, which analyses the climate and environmental aspects of Silicon Valley-based general-purpose AI systems developed by Big Tech companies. It will specifically examine how companies' actions relate to their environmental impact, and the doctoral student will investigate how perceptions of human environmental impact change with accelerating AI implementation. The doctoral student will be affiliated with the WASP-HS Graduate School.

The application deadline is October 23, 2025.

https://www.kth.se/lediga-jobb/858323?l=en

#envhist #AI #computingHistory #climateChange #SiliconValley

@AmenZwa

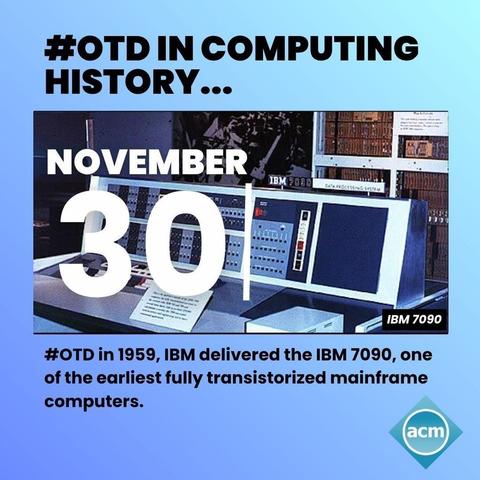

"#OTD in #ComputingHistory in 1956, IBM released the first manual for the programming language FORTRAN."

https://mathstodon.xyz/@ACM@mastodon.acm.org/115379029173813774

BTW re: your book on improving Fortran: Have you implemented it? It sounds like an advantageous language. It's different enough that I'm not sure people would call it Fortran. I was kind of overwhelmed by the amount of thought in it; is it at all backward compatible?

One thing caught my eye that breaks things: row-major versus column-major order. Fortran arrays are stored column-major, so the elements of a column sit sequentially in RAM and often in cache as well. Fortran programmers are keenly aware of this and of its impact on the runtimes of very long simulations.
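To make the layout difference concrete, here is a small sketch of how a 2-D index maps to a flat memory offset under each convention. The helper names are hypothetical, and it uses 0-based indices for brevity, whereas Fortran arrays are 1-based by default.

```python
# Flat memory offset of element (i, j) in a 2-D array, 0-based indices.

def column_major_offset(i: int, j: int, nrows: int) -> int:
    """Fortran-style layout: consecutive rows of one column are adjacent."""
    return i + j * nrows


def row_major_offset(i: int, j: int, ncols: int) -> int:
    """C-style layout: consecutive columns of one row are adjacent."""
    return i * ncols + j


NROWS, NCOLS = 3, 4

# Walk down column j = 1, the typical inner loop in Fortran code.
col_walk_fortran = [column_major_offset(i, 1, NROWS) for i in range(NROWS)]
col_walk_c = [row_major_offset(i, 1, NCOLS) for i in range(NROWS)]

print(col_walk_fortran)  # stride 1: contiguous, cache-friendly
print(col_walk_c)        # stride NCOLS: jumps through memory
```

The same loop that walks memory with stride 1 in a column-major layout strides by a whole row in a row-major one, which is why switching the convention would hurt existing code tuned for column order.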