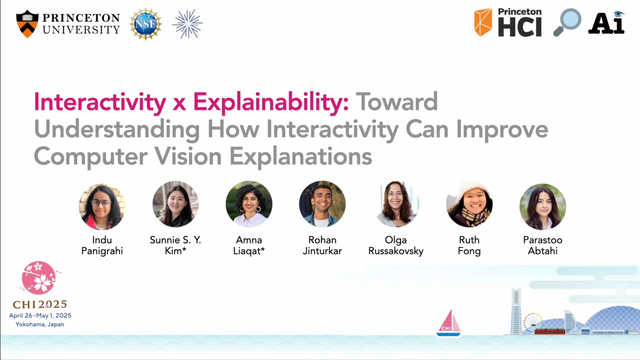

Responsible AI & Human-AI Interaction

Incoming Research Scientist at Apple

Previously: Princeton CS PhD, Yale S&DS BSc, MSR FATE & TTIC Intern

| | |
| --- | --- |
| Website | https://sunniesuhyoung.github.io |
| Names | Sunnie (pronounced as sunny☀️), Suh Young, 서영 |
| Pronouns | she/her/hers |