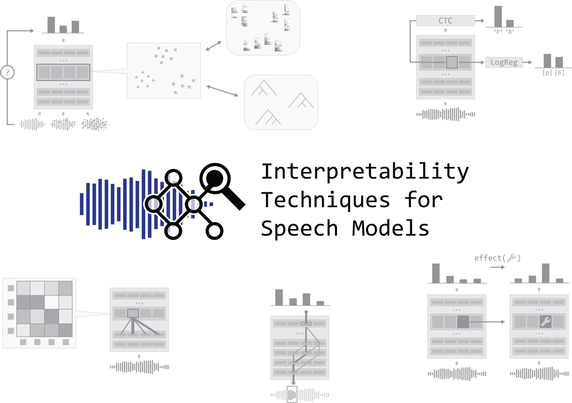

Want to learn how to analyze the inner workings of speech processing models? 🔍

Check out the programme for our tutorial, taking place at this year's Interspeech conference in Rotterdam: https://interpretingdl.github.io/speech-interpretability-tutorial/

The schedule features presentations and interactive sessions with a great team of co-organizers: Charlotte Pouw, Gaofei Shen, Martijn Bentum, Tom Lentz, @hmohebbi, @wzuidema, @gchrupala (and me!). We look forward to seeing you there 😃