Ok, with a nice cost-saving PR into nanoGPT as of this morning, I finally managed to fit the largest GPT-2 model (gpt2-xl) with vanilla DDP on my 8XA100 40GB node, with batch size 1 (per GPU) and no tricks (e.g. checkpointing). So with a batch size of ~1M tokens, 300B tokens = 300K iters, and at ~17 sec/iter that's... checks notes... 59 days to reproduce GPT-2. Cool.
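The back-of-the-envelope numbers above can be checked with a few lines of Python. This is a rough sketch under stated assumptions, not nanoGPT code. It assumes "1M" means 10**6 tokens per optimizer step, that every sequence fills GPT-2's 1024-token context, and that the batch of 1 per GPU is scaled up to the full batch through gradient accumulation. All helper names are hypothetical.

```python
# Rough training-time arithmetic for the numbers in the post.
# Assumptions (not from the post): "1M" = 10**6 tokens per optimizer step,
# block_size = 1024 (GPT-2's context length), and the large batch is reached
# via gradient accumulation. Function names are hypothetical.

SECONDS_PER_DAY = 24 * 60 * 60


def iterations_needed(total_tokens: int, tokens_per_iter: int) -> int:
    """Number of optimizer steps needed to consume total_tokens."""
    return total_tokens // tokens_per_iter


def days_to_train(iters: int, sec_per_iter: float) -> float:
    """Wall-clock days for the given number of optimizer steps."""
    return iters * sec_per_iter / SECONDS_PER_DAY


def grad_accum_steps(tokens_per_iter: int, n_gpus: int, micro_batch: int, block_size: int) -> int:
    """Forward/backward micro-steps needed to accumulate ~tokens_per_iter tokens."""
    tokens_per_micro_step = n_gpus * micro_batch * block_size  # 8 * 1 * 1024 = 8192
    return round(tokens_per_iter / tokens_per_micro_step)


if __name__ == "__main__":
    iters = iterations_needed(300 * 10**9, 10**6)
    print(iters)  # 300000
    print(round(days_to_train(iters, 17.0), 1))  # 59.0
    print(grad_accum_steps(10**6, n_gpus=8, micro_batch=1, block_size=1024))  # 122
```

Because 10**6 is not a multiple of 8192, 122 accumulation steps give 999,424 tokens per optimizer step. Picking 2**20 tokens as the batch instead would make it exactly 128 steps.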

I hope it's allowed to cross-link to Twitter. I'm not getting banned, right?

Not me awkwardly cross-posting my tweets to Mastodon about my new GPT video:

https://twitter.com/karpathy/status/1615398117683388417

Andrej Karpathy on Twitter

“🔥 New (1h56m) video lecture: "Let's build GPT: from scratch, in code, spelled out."

https://t.co/2pKsvgi3dE

We build and train a Transformer following the "Attention Is All You Need" paper in the language modeling setting and end up with the core of nanoGPT.”

When I find a song I like, I just put it on repeat for the entire day. Today's (re-)find is Zonnestraal (MÖWE Remix): https://www.youtube.com/watch?v=5HJr3Sxtsvg

This is the coding music for my upcoming best-ever tutorial on GPT, which I am working on today.

About a year ago I stumbled upon a Twitch channel that played this song on repeat next to a video of a cat jamming and singing. I love the internet.

De Hofnar: Zonnestraal (MÖWE Remix)

Even one night of GPT training on a single little-GPU-that-could is enough to produce a decent poet:

And all the land shall be bright

With the looks of a golden light;

And the willows beyond the stream

Shall quiver with the delight

Of music and of winds that gleam

On the dark waters of night;

The original Transformer paper used no biases in the self-attention's Linear layers (the ones producing q, k, v), but the OpenAI GPT-2 checkpoints seem to include them. Is anyone aware of best practices around whether to include these?
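To make concrete what is being asked about, here is a minimal NumPy sketch of a fused q, k, v projection with an optional bias, using a (C, 3C) weight layout similar to the fused `c_attn` layer in GPT-2. It also counts how many parameters those biases add for a GPT-2 XL sized model (48 layers, n_embd 1600). The function names are hypothetical, and the sketch does not settle whether the biases help; it only shows that they are a per-channel additive offset with a small parameter cost.

```python
import numpy as np


def qkv_project(x, W, b=None):
    """Fused q, k, v projection (hypothetical helper, GPT-2-style layout).

    x: (T, C) activations, W: (C, 3C) weight, b: optional (3C,) bias.
    Returns q, k, v, each of shape (T, C).
    """
    qkv = x @ W
    if b is not None:
        # The bias term: a learned per-channel offset, the same for every position.
        qkv = qkv + b
    return np.split(qkv, 3, axis=-1)


def qkv_bias_params(n_layer, n_embd):
    """Total parameters contributed by q, k, v biases across all layers."""
    return n_layer * 3 * n_embd


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    T, C = 4, 8
    x = rng.standard_normal((T, C))
    W = rng.standard_normal((C, 3 * C))
    b = rng.standard_normal(3 * C)

    q, k, v = qkv_project(x, W)          # no bias, as in the original paper's formulation
    q_b, k_b, v_b = qkv_project(x, W, b)  # with bias, as in the GPT-2 checkpoints
    # With a bias, every row of q is shifted by the same vector b[:C].
    print(np.allclose(q_b - q, b[:C]))  # True
    print(qkv_bias_params(48, 1600))    # 230400
```

For a model with roughly 1.5B parameters, about 230K bias parameters are a negligible fraction, so the choice is more about training behavior than model size.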

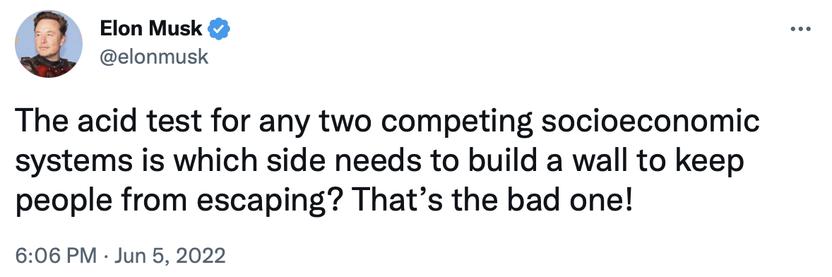

When I read the announcement I immediately thought of this tweet, one of my favorites. Judging by the overwhelming sentiment among friends, things look dark atm, but my unpopular (?) hope/belief is that Twitter can, over time, keep the good changes, iterate on the bad ones, and converge towards a global optimum. I hope I'm right; let's see. TL;DR: in the Denial stage of grief :)