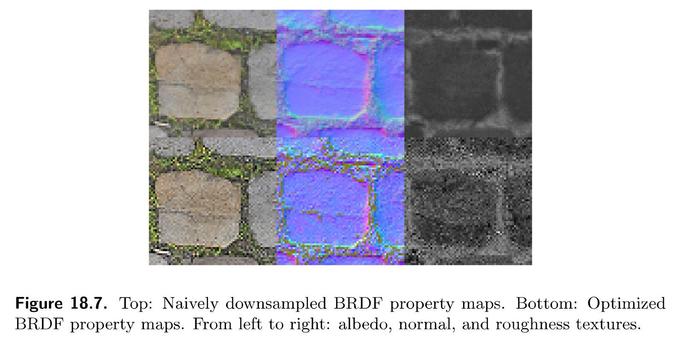

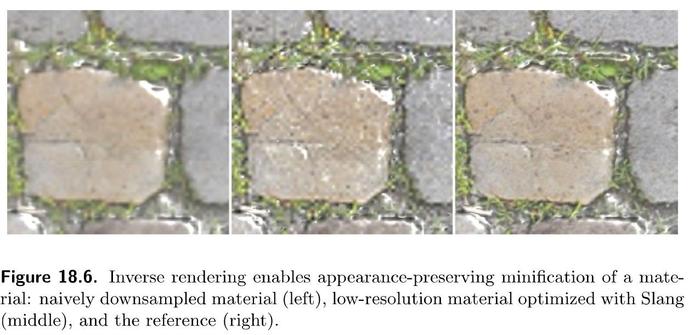

The data-driven approach can work for any BRDF, does not require lossy approximations, and can model spatial effects and relationships. Approaches like Toksvig specular AA only modify roughness. By comparison, we automatically compensate for the loss of sharpness and modify diffuse and normal maps!

One is automatic computing Jacobians of variable transformations common in Monte Carlo integrals.

One is a surprisingly superfast BC compressor that uses SGD.

Finally, the last application replaces analytical material texture mipmap generation (such as LEAN or Toksvig) with a data-driven approach.

Fortunately, you don't have to! With Slang, you can differentiate your existing shader code. And it's getting a lot of attention, including recent Khronos adoption:

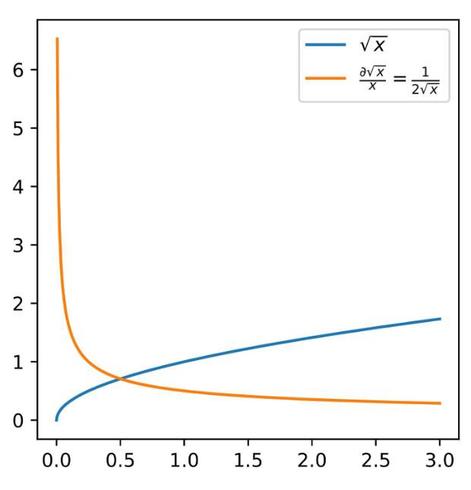

https://www.khronos.org/news/press/khronos-group-launches-slang-initiative-hosting-open-source-compiler-contributed-by-nvidia The ability to compute a gradient of a shader function is not enough to make differentiable programming easy. SGD works great in over-parametrized settings, such as neural networks, but it requires a lot of know-how on avoiding local minima, exploding gradients, and dealing with non-convex problems.

Khronos Group Launches Slang Initiative, Hosting Open Source Compiler Contributed by NVIDIA

The Khronos Group has announced the launch of the new Slang™…

The GPU Zen 3 book is out, perfect timing for the Holiday Season! https://www.amazon.com/GPU-Zen-Advanced-Rendering-Techniques/dp/B0DNXNM14K

So many fantastic-looking articles!

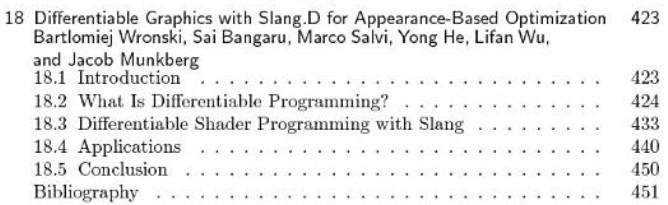

Together with my teammates, we have also contributed a chapter: "Differentiable Graphics with Slang.D for Appearance-Based Optimization".

I keep seeing misinformation about AI being more costly to run (energy-wise) than the revenue it brings. This is not true, but personal biases and shadenfreude is too strong. Yet another proof, though probably will be ignored anyway - for OpenAI, the cost of inference is half of their revenue. (And it is improving month over month)

The cost of new research and training new models is what's so expensive, electricity hungry, and brings them loss.

Haters gonna hate and fill their hearts with schadenfreude, but AI products ARE delivering huge value (not just for the users, but the companies) and revenue boosts.

It's not really my problem, as I am not a hiring manager or a company owner.

Obviously, the level of "seniority" of jobs being eliminated will increase over time, so I am also not "safe". But changes take time, and at some point, I will just retire and focus on my blog, my own coding projects, and hobbies. ¯\_(ツ)_/¯

What can juniors do? The author gives some advice I agree 100% with (image) and even if he's wrong about predictions, it's pretty damn good advice. :)

People like sensational "findings" about the energy use of ML, but luckily, things are not so dire. :)

Image description: A popular sensational "paper" overestimated energy use by just 118000x (yes, *times*). Ooopsie.

Training a transformer produces 2.4 kg of emissions rather than the 284,000 kg estimated by Strubell et al. https://arxiv.org/abs/2104.10350

Carbon Emissions and Large Neural Network Training

The computation demand for machine learning (ML) has grown rapidly recently, which comes with a number of costs. Estimating the energy cost helps measure its environmental impact and finding greener strategies, yet it is challenging without detailed information. We calculate the energy use and carbon footprint of several recent large models-T5, Meena, GShard, Switch Transformer, and GPT-3-and refine earlier estimates for the neural architecture search that found Evolved Transformer. We highlight the following opportunities to improve energy efficiency and CO2 equivalent emissions (CO2e): Large but sparsely activated DNNs can consume <1/10th the energy of large, dense DNNs without sacrificing accuracy despite using as many or even more parameters. Geographic location matters for ML workload scheduling since the fraction of carbon-free energy and resulting CO2e vary ~5X-10X, even within the same country and the same organization. We are now optimizing where and when large models are trained. Specific datacenter infrastructure matters, as Cloud datacenters can be ~1.4-2X more energy efficient than typical datacenters, and the ML-oriented accelerators inside them can be ~2-5X more effective than off-the-shelf systems. Remarkably, the choice of DNN, datacenter, and processor can reduce the carbon footprint up to ~100-1000X. These large factors also make retroactive estimates of energy cost difficult. To avoid miscalculations, we believe ML papers requiring large computational resources should make energy consumption and CO2e explicit when practical. We are working to be more transparent about energy use and CO2e in our future research. To help reduce the carbon footprint of ML, we believe energy usage and CO2e should be a key metric in evaluating models, and we are collaborating with MLPerf developers to include energy usage during training and inference in this industry standard benchmark.

Finally some country ranking in which I can be proud of being Polish :)