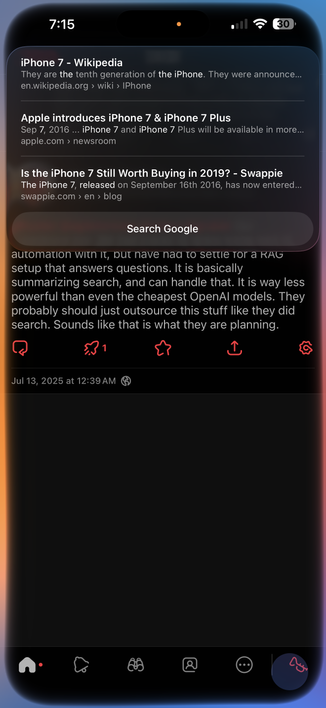

It's not just that Siri is "bad". As people get used to basic LLM features, it increasingly feels like a product from 10 years ago.

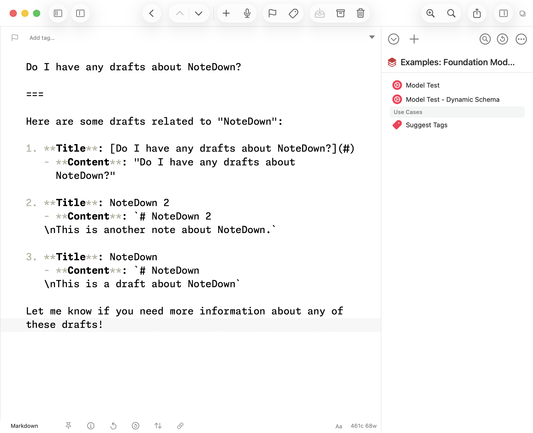

Comparison: asking Siri vs. Notion AI to find the contents of a note.

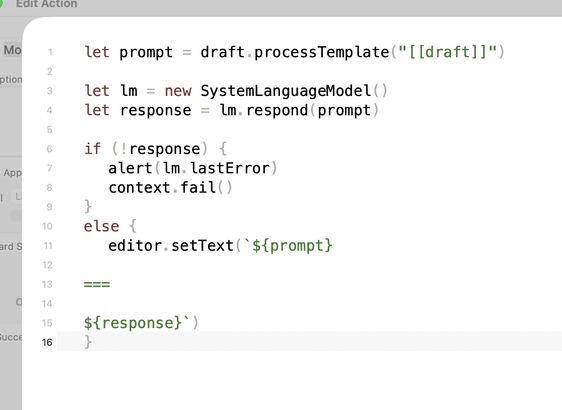

The "Use Model" action in Shortcuts is a stopgap for power users, not something most people will find approachable. Apple's Foundation Models also feel like models from two or three years ago.

Apple needs to throw away Siri and replace it with an LLM with App Intents tool-calling ASAP.
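The hard part is the model. The tool-calling plumbing is simple. Here's a minimal sketch of the dispatch step, assuming the LLM emits a JSON call shaped like `{"name": ..., "arguments": {...}}`. The registry, the `find_note` intent, and the toy note store are hypothetical stand-ins for illustration, not Apple's App Intents API.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical stand-in for App Intents: each "intent" is a named handler
# the model is allowed to call. Names and signatures are illustrative only.
INTENTS: Dict[str, Callable[..., Any]] = {}


def intent(name: str):
    """Register a function as a callable tool under `name`."""
    def wrap(fn):
        INTENTS[name] = fn
        return fn
    return wrap


# Toy note store standing in for the Notes app's data (hypothetical).
NOTES = {
    "Groceries": "eggs, milk, coffee",
    "Trip ideas": "Lisbon in May",
}


@intent("find_note")
def find_note(query: str) -> str:
    # Case-insensitive substring match on note title or body.
    q = query.lower()
    for title, body in NOTES.items():
        if q in title.lower() or q in body.lower():
            return f"{title}: {body}"
    return "No matching note."


def dispatch(tool_call_json: str) -> Any:
    """Run a model-emitted tool call against the intent registry."""
    call = json.loads(tool_call_json)
    handler = INTENTS.get(call["name"])
    if handler is None:
        raise KeyError(f"unknown intent: {call['name']}")
    return handler(**call.get("arguments", {}))


if __name__ == "__main__":
    # What the model might emit for "what did I write about Lisbon?"
    print(dispatch('{"name": "find_note", "arguments": {"query": "lisbon"}}'))
```

Each app would expose its actions as intents, the model picks one and fills in the arguments, and the system runs it and feeds the result back. Siri's fixed phrase-matching is the piece that gets replaced.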