@thomasfuchs I can relate to this sentiment, but the paper refutes the idea that "perceived ethicality" (or "perceived capability") explains the discrepancy.

@jedbrown @thomasfuchs Yeah, I think it's really just this: the more you know about AI, the more you know about its present limitations. You are less susceptible to the hype about future AI and more realistic about what to expect. Which isn't bad at all, even quite promising. But it is far from threatening the experience of skilled workers.

Though it may exceed the management skills of certain CEOs.

@winterayars @jedbrown @thomasfuchs On the other hand, it was also accepted that robots weld cars instead of letting humans do it. That did not stop my union from becoming more powerful than ever, nor did it result in a smaller workforce.

Technology can also lead to more meaningful work and better working conditions. Maybe we should not let CEOs and libertarians decide what AI can be good for?

@jedbrown @winterayars @thomasfuchs Sorry, but I am not that religious. I don't believe in miracles, neither for me nor for the bad guys.

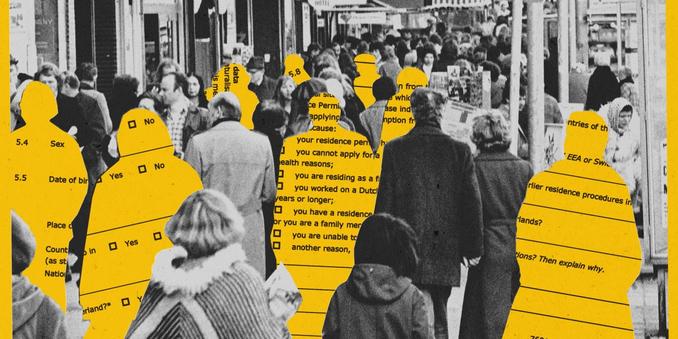

Can't you find a more grounded example of how AI can separate and discriminate against people if put into the wrong hands?

https://www.technologyreview.com/2025/06/11/1118233/amsterdam-fair-welfare-ai-discriminatory-algorithms-failure/