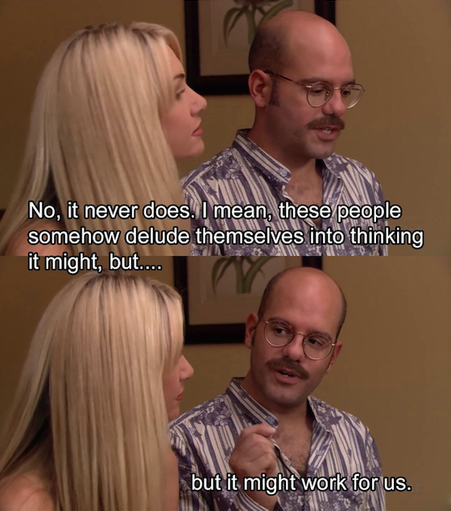

So there are papers/studies that show:

1. LLMs don’t work (high error rates and a habit of making stuff up)

2. Using LLMs reduces your productivity

3. LLMs cannot—ever—be “scaled” to achieve human-level intelligence

4. Most people who speculate in financial bubbles lose their investment

Any questions?