shot, chaser

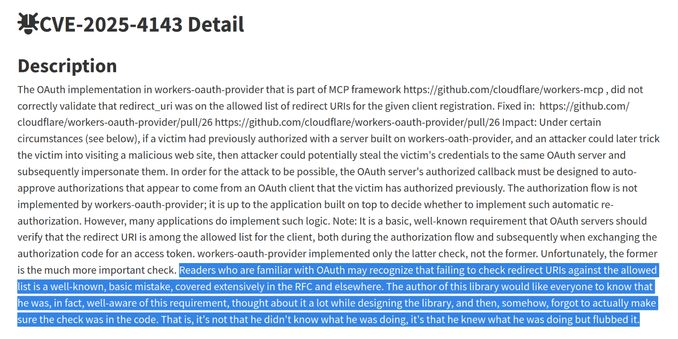

@hailey so he did not, in fact, know what he was doing.

@gsuberland @hailey “the author of this library may possibly have been coding based on vibes as it turns out”

He didn't know what he wasn't doing because knowing what he should have been doing made him think he knew what he was doing.

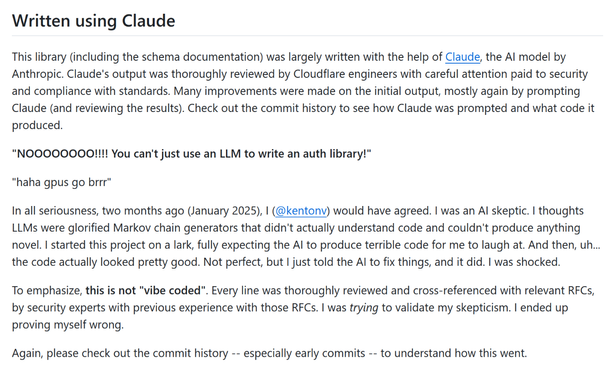

Why you would start a project with a possibly error-riddled initial state and then iterate with possibly error-riddled improvements, I don't know.

@SorceryForEva @s0 @gsuberland @hailey that's been my biggest objection to using AI as a coding agent for quite a while now.

Personally, I find writing my own code easier and less stressful than debugging others' code.

Similarly, I'd MUCH rather drive my own (sigh) Tesla than "supervise" the idiot Autopilot driving my (sigh) Tesla.

@jimsalter @SorceryForEva @s0 @gsuberland @hailey It hits the deeper problem that humans are much better at recognizing wrong things in front of us than we are at recognizing that something is missing. LLMs don't create that problem but they're a catalyst for hitting it more since they often exude confidence and don't leave the same tells as a human in over their head might leave.

@gsuberland @hailey i recognise the name and i guess we could say "he knew what he wasn't doing"