Digital Humanism Conference 2025

What is the definition of success?

Let’s assume it’s this: hosting the very first Digital Humanism Conference and selling it out weeks in advance. If that’s the benchmark, then #DigHum2025 in Vienna has already nailed it. And not just by the numbers – the energy, the quality of discussions, and the relevance of the topics confirm it: this was something special.

Especially when you consider the context: a conference not about product launches or flashy tech demos, but about something much deeper, namely how we as a society want to shape our digital future. Technology, absolutely yes. But through the lens of human rights, democracy, and ethics.

Day 1: AI and Society

Monday kicked off with a deep dive into the societal implications of artificial intelligence. The opening day set a tone of critical reflection, bringing together political leaders, renowned academics, and cultural voices to examine the sweeping societal, ethical, and ecological implications of AI for our collective future. From the very first panel to the closing sessions, one message came through loud and clear: technology must serve people – not the other way around.

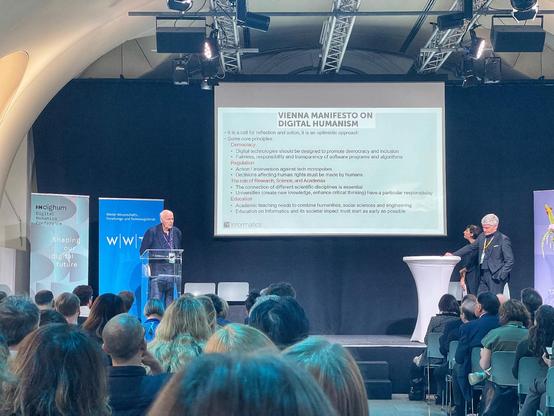

Hannes Werthner, co-founder of the Digital Humanism Initiative, reinforced this by highlighting the contradictions of our digital infrastructure: essential yet monopolized, revolutionary yet deeply flawed. He criticized the privatized foundation of the internet and warned of increasing state and individual disempowerment in the digital age. His reference to the Vienna Manifesto on Digital Humanism served as a call to action – urging academia, policy, and civil society to join forces in shaping responsible technologies.

Hannes Werthner presenting the Vienna Manifesto on Digital Humanism

A major theme throughout the day was AI’s corrosive influence on democratic discourse. Lawrence Lessig impressed not only with his presentation skills but also with his emotional reaction to the current situation in the U.S., his eyes welling up with tears as he described his country’s descent into fascism. Nonetheless, he brought laughter back to the room when he introduced the audience to a new term: “bespoken reality”, in which citizens no longer share a common understanding of facts. The critique was not anti-technology but deeply political: the current digital architecture, as it stands, undermines public reason. The consensus? We need more face-to-face civic discourse – “not clicktivism”, as Lessig put it.

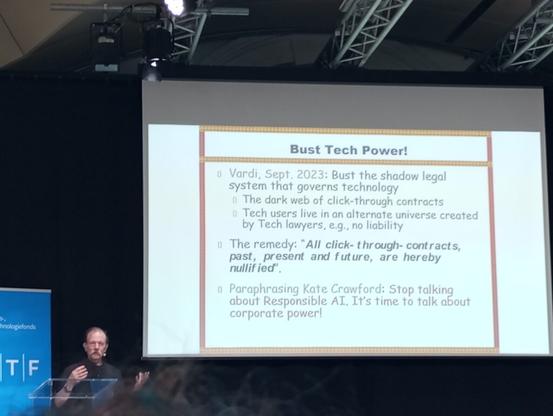

Moshe Y. Vardi delivered a powerful critique of AI’s entanglement with neoliberal economics. He compared the rise of AI to the neoliberal wave that began in the 1980s, arguing that both have fueled polarization and inequality. Vardi warned against corporate domination of digital infrastructure and called for legal reforms, such as invalidating “click-through contracts”, to reassert democratic control.

Moshe Vardi criticizing corporate power

In the afternoon, I joined the workshop on “eco-social regeneration”, where environmental concerns were central. Speakers from Ars Electronica, the MAK Museum of Applied Arts, and artists like Martine Jarlgaard illustrated how tech and art can collaborate to imagine ecologically responsible futures. Sarah Spiekermann-Hoff stayed true to her signature techno-critical tone and gave an intense assessment of our tendency to anthropomorphize AI – a habit she bluntly dismissed as “bullshit”. Machines, she argued, are not thinking beings; they merely process data. AI does not act out of self-preservation; it simply maximizes outcomes based on the objectives embedded in its algorithms. These objectives are neither neutral nor inevitable; they are entirely the result of human design choices.

The day concluded with a vibrant discussion about AI’s technical trajectory. Experts such as Edward A. Lee, Ute Schmid, and Michael Bronstein tackled the big question: How far can AI really go? Schmid emphasized that AI lacks intentionality and subjective experience, and Lee questioned whether agentic AI systems (proactive, decision-making machines) should ever be trusted with moral decisions. The conversation also highlighted that AI is powerful not because it mimics human thinking, but because it leverages patterns in data, sometimes in ways that even scientists cannot fully understand. There was a shared belief that AI must remain a tool in the hands of qualified humans, especially in science and education.

Day 2: Platforms and Power

The innovation, investment, and development of AI are currently dominated by China and the U.S. Unfortunately, the originally scheduled speaker, Yi Zeng, who could have offered valuable insights from a Chinese perspective, had to cancel. However, his replacement, Dame Wendy Hall, delivered an equally compelling contribution on global perspectives. While AI Safety Institutes in the U.S. and UK and the EU’s regulatory forums are emerging, they remain limited in scope and inclusivity: people in the Global South are left out. In response, the UN launched its Governing AI for Humanity initiative to support equitable access and raise a global fund. But due to political tensions, the UN now faces financial challenges.

Christiane Wendehorst continued the discussion by presenting the AGIDE project. Contrary to the initial assumption that differing values would drive divergent approaches, their research revealed five recurring narrative patterns, which themselves appear to shift. As a law professor, she emphasized that instead of drafting new regulations, we could rely on existing frameworks, provided they are interpreted through more inclusive, globally resonant narratives.

Christiane Wendehorst presenting the Narratives of Digital Ethics

The panel “Platforms as new institutions” tackled the growing power of digital platforms, framing them as new institutional actors that increasingly shape public life and norms. Their influence now rivals or even exceeds that of many nation-states. Philipp Staab pointed out that many states see themselves as being in perpetual crisis (climate change, war, economic instability), which makes societies more receptive to technocratic and authoritarian governance. In this vacuum of trust and stability, platform companies step in, offering control, convenience, and structure, all while consolidating their power.

My favourite topic, the governance of AI through frameworks and policies (or, more accurately, the lack thereof), was covered in a session that brought together experts from academia (including the already mentioned Dame Wendy Hall, whom King Charles refers to as “the AI-lady”), policymaking (Anja Wyrobek, Legal Policy Adviser at the European Parliament), and international organizations (Paula Hidalgo-Sanchis, UNU Global AI Network Coordinator; and Marc Rotenberg, President and Founder of the Center for AI and Digital Policy). The CAIDP has published a comprehensive sourcebook of AI policies, which collects the major global and national frameworks.

Panel moderated by George Metakides

I’m so glad I stayed for the panel “Cybersecurity in New Times”. As with many digital issues, cybercrime is set to explode rapidly and unpredictably. Did you know that some of the most intense users of AI today are cybercriminal gangs in Myanmar? While cybercrime is often a “smash and grab” operation (where speed is key to reselling the stolen assets), Rafal Rohozinski pointed out that the dynamic shifts entirely when state actors get involved. One of his most striking remarks was that we talk so much about artificial intelligence when we should really be talking about real stupidity, given that we’ve unleashed an entire digital industry without any form of product liability. I assume he meant the digital ecosystem as a whole, not just AI.

But it doesn’t have to be this way. Optimism came from Matteo Maffei, who highlighted concrete examples like the SCION project in Switzerland. SCION enables secure and resilient data transmission through verifiable, trusted paths and is being used in critical sectors: finance, healthcare, government, and energy. SCION-based networks help shield vital infrastructure from cyberattacks. It’s a powerful reminder that a more secure and sovereign digital future is entirely possible, provided there is investment, willpower, a solid concept, and political agreement.

Day 3: Disruptive Innovation

The third day was marked by discussions on intersectionality, diversity, inclusion, and transdisciplinary approaches to technology (particularly AI) development and innovation. In his keynote speech, MIT professor Michael Cusumano gave an overview of the evolution of, and interdependencies among, the key companies and platforms shaping the AI landscape. A particularly striking point was the pivotal role NVIDIA has played in the development of generative AI. He also raised several critical big-picture concerns: content generation versus ownership, the concentration and misuse of platform power, the challenges for regulation or self-regulation, and the environmental impact. On that last point, however, DeepSeek has significantly changed the trajectory of energy consumption: using reinforcement learning, it demonstrated that large models can be trained far more efficiently. This not only challenged industry norms but also put pressure on OpenAI, as DeepSeek’s tokens cost only a fraction of the price.

Bashar Nuseibeh addressed the ethical and social responsibilities of software engineering, arguing that all research and technology embody values – consciously or not. He advocated for a more transdisciplinary approach: putting together diverse teams with different social norms to collaboratively solve social problems. A highlight of his talk was the exploration of overlooked contexts, such as animal privacy. While the notion might seem amusing at first (who wants to say goodbye to random cat pictures on the internet?), it raises serious and thought-provoking questions: how do we design technology for beings that cannot give consent, or for contexts where consent is difficult or impossible to obtain? This example underscored the broader point that ethical technology design must account for diverse, often overlooked perspectives.

The panel “Reimagining AI through Social Innovation” brought together researchers from very different backgrounds who showcased ways to engage the public in the AI discourse. Gabriella Waters presented her work that uses neurodiversity as a framework to improve AI systems. Neurodivergent individuals process information differently and may have distinct communication preferences, sensory experiences, and executive functioning styles. These insights can inform the design and development of more inclusive, adaptive, and capable AI technologies.

Mirko Schäfer reported on the widespread use of AI in public management in the Netherlands, where applications range from waste management and predictive maintenance of infrastructure to identifying so-called “problem addresses”. This increasing reliance on AI also raises serious ethical concerns. One particularly severe case he discussed was the Dutch child-benefit scandal, in which a risk assessment algorithm (intended to detect fraud) wrongly flagged thousands of families, with devastating consequences that are still felt today: “Lives were destroyed”. Although the initial response focused on repair, the severity of the harm pushed the conversation toward long-term systemic renewal. One example of such renewal is the Dutch government’s Algorithm Register.

From breakage to repair to renewal – methods from the Dutch government

In the afternoon, every GDPR fan’s biggest crush, Max Schrems, joined the panel “From Social Media to AI: Lessons Learned”. Moderated by Noshir Contractor and joined by Chris Bail and Sunimal Mendis, the discussion unpacked the tangled evolution from social media regulation to the looming challenges of AI governance. Schrems, never one to mince words, reminded the audience that many legal frameworks already exist – some of them even dating back to the Roman Empire – but they’re simply not enforced. He has the impression that, since about last year, many companies no longer even pretend to care. Their attitude has shifted to a blunt “We’ll just do it anyway”. Chris Bail described how seemingly minor design tweaks can have vastly different impacts. On the (notoriously toxic) platform Nextdoor, for example, simply prompting users with “Would you like to rephrase this more kindly?” drastically reduced toxic posts.

From social media to AI: No lessons learned

The final panel I attended was “AI and Democracy: From Values to Action”. It brought together a group of experts (read: doers) exploring how democratic values can be translated into concrete action. From Austria to India, Ghana, and the UK, the speakers shared real-world experiences of fighting for fair and inclusive AI. The Austrian NGO epicenter.works is actively lobbying for fundamental rights in digital spaces. Siddhi Gupta shared insights from practical work at the Inclusive AI Lab and on the underrepresentation of the Global South. Teki Akuetteh, Founder and Executive Director of the Africa Digital Rights Hub, called out the imbalance: while Africa has pioneered innovations like mobile payments, it remains largely on the receiving end of AI developments. The benefits flow mainly to infrastructure-rich economies. Michael Veale emphasized the need for AI literacy, meaning not only technical know-how but also critical thinking skills that empower citizens to question, challenge, and reshape these systems.

Practitioners sharing how to put ideals into action

Takeaways

After three intense days packed with information, encounters, and ideas, I’m still processing. The range of topics was vast, sometimes overwhelming, always engaging. This (admittedly long) post captures just a fraction of what truly stayed with me. There was so much more: inspiring sessions I couldn’t fit in here, spontaneous conversations, and the in-between moments where I connected with brilliant, like-minded people. It was thought-provoking, energizing, and a reminder of how vital these spaces for exchange really are.

The Digital Humanism Conference wasn’t just about critique – it was about agency. About reclaiming the digital for the public good. About putting people, not profit, at the center of our digital future.

Three packed days, dozens of powerful talks, and one clear message: the future is still ours to shape!

Me and ICT4D lecturer Anna Bon

PS: Finally, a scientific conference where I could fully thrive on fantastic vegan food – shoutout to Rita bringt’s! If only I had a button to mute everyone who uses the word “sustainability” with meat on their plate… but that’s a conversation for another day. 😉

Further reading:

Books about Digital Humanism

#conference #DigHum2025 #digitalHumanism